AWS Cloud Practitioner For Beginners

By Himanshu Shekhar, 24 May 2022

🌩️ AWS Certified – Associate: Beginner’s Guide

1.1 Introduction – What is AWS?

AWS (Amazon Web Services) is Amazon’s powerful cloud computing platform that allows individuals and businesses to access IT resources — like servers, storage, databases, and software — over the internet instead of owning them physically.

Think of AWS as renting computers and tools from Amazon instead of buying them. You get exactly what you need, use it for as long as you want, and pay only for what you use — just like paying an electricity or mobile bill.

☁️ Physical Server vs Virtual Server

Before we go into the setup, here’s a comparison between AWS physical and virtual servers:

| Concept | Physical Server | Virtual Server |

|---|---|---|

| In AWS | You can’t directly “create” physical servers — AWS manages them in its data centers. But you can rent dedicated physical servers using Dedicated Hosts or Bare Metal Instances. | Virtual servers are standard AWS EC2 instances, created on top of AWS-managed physical hardware using virtualization. |

| Control Level | Full control over hardware (bare metal access). | Virtualized — limited to your instance’s resources. |

| Hardware Access | Direct access to CPU, RAM, Disk (no virtualization layer). | Indirect access — runs through AWS hypervisor (virtualization). |

| Use Case | Compliance, licensing, hardware-level apps (e.g., VMware, antivirus kernel modules). | General workloads, web apps, databases, testing, scaling. |

⚙️ Key Benefits of AWS

- 💰 Pay only for what you use (like utility billing)

- 📈 Automatically scale resources up or down

- 🛡️ High security and reliability

- 🌍 Global availability — access from anywhere

🚀 Why AWS is So Popular

- 💰 Pay-as-you-go: No upfront cost — only pay for what you use.

- ⚡ Scalable: Easily scale resources up or down based on demand.

- 🛡️ Secure: Backed by top-level encryption, compliance, and data protection.

- 🌍 Global Reach: AWS has data centers around the world — access services from anywhere.

🎓 Why Learn AWS Associate?

Becoming an AWS Certified Associate is a great step to start your cloud career. Here’s why:

- 📈 High Demand: Cloud professionals are in huge demand globally.

- 💼 Career Growth: Opens paths to roles like Cloud Architect, Cloud Engineer, and DevOps Specialist.

- 🎯 Strong Foundation: Builds the base for advanced AWS certifications at the Professional and Specialty levels (for example, Security).

- 🧠 Hands-on Skills: Learn real AWS tools like EC2, S3, RDS, and Lambda.

- 💰 Cost Optimization (Reserved Instances): Understand how Reserved Instances help reduce AWS compute costs by up to 72% for long-term, predictable workloads.
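The Reserved Instance saving mentioned above is plain arithmetic. The sketch below compares one year of On-Demand usage with the best-case 72% discount. The $0.10 hourly rate is a made-up figure for illustration, not a real AWS price, and actual discounts depend on instance type, term length, and payment option.

```python
HOURS_PER_YEAR = 24 * 365  # one instance running all year


def yearly_cost(hourly_rate: float, discount: float = 0.0) -> float:
    """Return the yearly cost of one always-on instance.

    discount is a fraction between 0.0 (no discount) and 1.0 (free).
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return round(hourly_rate * (1.0 - discount) * HOURS_PER_YEAR, 2)


# Hypothetical On-Demand rate of $0.10 per hour (assumption, not an AWS price).
on_demand = yearly_cost(0.10)
# Same instance with the maximum advertised Reserved Instance discount.
reserved = yearly_cost(0.10, discount=0.72)
savings = round(on_demand - reserved, 2)

print(f"On-Demand: ${on_demand}, Reserved: ${reserved}, saved: ${savings}")
```

The discount only pays off when the instance really runs for most of the term, which is why Reserved Instances suit steady, predictable workloads.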

Main Core Services in AWS (Quick Overview)

AWS is built around four core service categories (compute, storage, networking, and databases), plus additional categories for Security, Monitoring, and DevOps.

- 🧩 Compute (Power / Processing): Runs your applications, servers, and functions (EC2, Lambda).

- 🗄️ Storage (Disk Space): Stores data, files, and backups (S3, EBS, Glacier).

- 🌐 Networking & Content Delivery: Connects resources securely and delivers content globally (VPC, CloudFront, Route 53).

- 🧮 Database Services: Manages structured and unstructured data (RDS, DynamoDB, Aurora).

- 🔒 Security & Identity: Controls access and protects your environment (IAM, KMS, WAF, Shield).

- ⚙️ Management & Monitoring: Tracks, audits, and optimizes your AWS usage (CloudWatch, CloudTrail).

- 💻 Developer / DevOps Tools: Automates code building, testing, and deployment (CodePipeline, CodeDeploy).

1.2 What is Cloud Computing?

Cloud computing means using the internet to access IT resources — like servers, storage, databases, and software — without owning them physically.

You just rent what you need from a cloud provider (like AWS, Azure, or Google Cloud) and pay only for what you use.

OR

Cloud computing is the on-demand delivery of IT resources such as servers, storage, databases, networking, analytics, and applications over the internet (“the cloud”) with pay-as-you-go pricing.

Instead of buying, owning, and maintaining physical data centers and servers, you can access technology services like computing power, storage, or databases on-demand from a cloud provider (e.g., AWS, Azure, GCP).

👉 Characteristics of Cloud Computing (NIST 5 Principles – Exam Favorite):

- On-Demand Self Service – Provision resources instantly without requiring human intervention.

- Broad Network Access – Access resources from anywhere using laptops, smartphones, or APIs.

- Resource Pooling – Multiple customers share the same infrastructure securely and efficiently.

- Rapid Elasticity – Scale computing resources up or down automatically as needed.

- Measured Service – Pay only for what you use with metered billing and usage tracking.

Main Types of Cloud Service Models:

- IaaS (Infrastructure as a Service):

  - AWS provides raw infrastructure like servers, storage, and networking.

  - You manage the OS, apps, and data.

  - Examples: EC2, EBS, VPC.

  - Analogy: Renting an unfurnished house; you set it up as you like.

- PaaS (Platform as a Service):

  - AWS provides infrastructure + platform (runtime, databases, OS).

  - You focus on apps without worrying about servers.

  - Examples: Elastic Beanstalk, RDS, AWS Fargate.

  - Analogy: Renting a furnished apartment; you just move in.

- SaaS (Software as a Service):

  - Ready-to-use software over the internet.

  - You only use the app, with no server or platform management.

  - Examples: Amazon Chime, Amazon WorkMail, Google Workspace, Salesforce.

  - Analogy: Staying in a hotel; everything is provided for you.

1.3 AWS Global Infrastructure (Regions, Availability Zones, and Edge Locations)

AWS has built a massive global network of data centers around the world so that cloud services are fast, reliable, and secure — no matter where users are. This global network is divided into three main components:

🗺️ 1. AWS Regions

🔹 Definition: A Region is a geographical area that contains multiple, isolated Availability Zones (AZs). Each Region operates independently for security and fault tolerance.

🔹 Key Points:

- Each Region is located in a distinct part of the world (e.g., us-east-1 in Virginia, ap-south-1 in Mumbai).

- Regions are physically separated for disaster recovery and high security.

- Each Region consists of multiple data centers grouped into Availability Zones.

| Region Name | Code | Location |

|---|---|---|

| US East (N. Virginia) | us-east-1 | USA |

| Asia Pacific (Mumbai) | ap-south-1 | India |

| Europe (Frankfurt) | eu-central-1 | Germany |

🔹 Use Case: Choose a Region closest to your users to reduce latency and comply with local data residency laws (e.g., store Indian data in India).

🏢 2. Availability Zones (AZs)

🔹 Definition: An Availability Zone is one or more data centers within a Region, each with its own power, cooling, and networking — built for high availability.

🔹 Key Points:

- Each Region has at least three AZs; larger Regions such as us-east-1 have six.

- AZs are connected through high-speed, low-latency fiber networks.

- Deploying apps across multiple AZs ensures fault tolerance and uptime.

🔹 Example (Mumbai Region, ap-south-1): ap-south-1a, ap-south-1b, ap-south-1c

💡 If one AZ fails due to outage or disaster, your applications in other AZs keep running — ensuring high availability.
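The naming pattern in the example above (Region code plus one trailing letter) can be checked with a few lines of code. This is a minimal sketch; the function name `split_az` and the regular expression are illustrative choices, not part of any AWS SDK.

```python
import re
from typing import Tuple

# Region code (e.g. "ap-south-1") followed by a single AZ letter (e.g. "a").
AZ_PATTERN = re.compile(r"^([a-z]{2}(?:-[a-z]+)+-\d+)([a-z])$")


def split_az(az_name: str) -> Tuple[str, str]:
    """Split an Availability Zone name into (region_code, zone_letter)."""
    match = AZ_PATTERN.match(az_name)
    if match is None:
        raise ValueError(f"not an Availability Zone name: {az_name!r}")
    return match.group(1), match.group(2)


for az in ("ap-south-1a", "ap-south-1b", "ap-south-1c"):
    region, letter = split_az(az)
    print(f"{az}: Region {region}, zone {letter}")
```

A Region code on its own, such as ap-south-1, is rejected because it has no trailing zone letter.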


📡 3. Edge Locations

🔹 Definition: Edge Locations are sites around the world that cache and deliver content closer to end users. They are used by Amazon CloudFront, Route 53, and AWS Global Accelerator.

🔹 Key Points:

- Used for Content Delivery Network (CDN) services to deliver data, videos, or APIs faster.

- Hundreds of Edge Locations exist across major global cities.

- Reduces latency by serving cached content from the nearest location to users.

🔹 Example: If your website is hosted in us-east-1 but accessed from Delhi, CloudFront delivers content via an Edge Location in Mumbai or Chennai for faster load times.

⚡ Edge Locations = Global performance boosters for AWS customers.

1.4 Types of Cloud Deployment Models

- Public Cloud: Shared infrastructure (AWS, Azure, GCP).

- Private Cloud: Dedicated to one organization (on-premises or hosted).

- Hybrid Cloud: Combination of public and private (used by banks, governments).

- Multi-Cloud: Using multiple providers (AWS + Azure + GCP).

1.5 Types of Cloud Service Models (IaaS, PaaS, SaaS, FaaS, CaaS)

Cloud computing services are categorized based on the level of control and management provided to users.


A. Infrastructure as a Service (IaaS)

- Provides raw infrastructure: virtual servers, networking, storage, firewalls, and load balancers.

- User controls OS, applications, middleware, runtime, and data.

- Cloud provider manages physical hardware + virtualization layer.

👉 AWS Examples: EC2, EBS, VPC, Elastic Load Balancer.

✅ Advantages: Maximum control, flexibility, and pay-per-use.

⚠️ Disadvantages: Requires technical expertise, manual patching, and security setup.

🏠 Analogy: Renting an unfurnished house — you set it up as you like.


B. Platform as a Service (PaaS)

- Provides infrastructure + managed runtime environment.

- Developers focus only on building and running apps — no server or OS management.

- Cloud provider handles scaling, patching, and database management.

👉 AWS Examples: Elastic Beanstalk, RDS, Fargate.

✅ Advantages: Faster development, auto-scaling, automated backups.

⚠️ Disadvantages: Less control, limited customization, and vendor lock-in risk.

🏢 Analogy: Renting a furnished apartment — everything is set up for you.


C. Software as a Service (SaaS)

- Fully managed applications delivered over the internet.

- Users don’t manage infrastructure, OS, or platform — just use the app.

- Access via browser or mobile app from anywhere.

👉 AWS Examples: Amazon Chime, Amazon WorkMail, Amazon Connect. Non-AWS example: Salesforce.

✅ Advantages: No setup, no maintenance, easy access.

⚠️ Disadvantages: Least control, vendor lock-in, limited customization.

🏨 Analogy: Staying in a hotel — everything is included; you just use the service.


D. Function as a Service (FaaS)

- Serverless computing model — upload functions, and AWS runs them automatically when triggered.

- No server management or scaling concerns — runs on demand.

- Pay only when your code executes (cost-efficient).

👉 AWS Examples: AWS Lambda (often paired with Step Functions for orchestration and EventBridge for event routing).

✅ Advantages: No servers to manage, automatic scaling, pay-per-execution.

⚠️ Disadvantages: Limited runtime, cold start delays, debugging complexity.

🍔 Analogy: Ordering food delivery — you don’t own a kitchen; you only pay when you order.


E. Container as a Service (CaaS)

- Provides a managed platform for running and orchestrating containers.

- Containers bundle apps with dependencies for consistent deployment.

- Cloud provider manages orchestration, scaling, and networking (Kubernetes or Docker).

👉 AWS Examples: Amazon ECS, Amazon EKS, AWS Fargate.

✅ Advantages: Consistent deployments, easier scaling, app isolation.

⚠️ Disadvantages: Requires container knowledge, complex networking, higher costs at scale.

🏙️ Analogy: Renting portable mini-apartments inside a building — isolated yet share base resources.

1.6 AWS Shared Responsibility Model

The AWS Shared Responsibility Model defines how security and compliance tasks are divided between AWS (the cloud provider) and you (the customer).

In simple terms — AWS secures the cloud, while you secure what’s inside the cloud.

⚙️ 1. AWS is Responsible for: “Security of the Cloud”

AWS manages and protects the infrastructure that runs all AWS services.

- 🏢 Physical Security: Protecting data centers, hardware, and facilities.

- 🌐 Network Infrastructure: Routers, switches, firewalls, and connectivity.

- 🧩 Virtualization Layer: Hypervisors and isolation of compute resources.

- 🖥️ Hardware Maintenance: Servers, storage, and networking devices.

- ☁️ Managed Services Security: Security of services like S3, RDS, DynamoDB, etc.

🧍 2. Customer is Responsible for: “Security in the Cloud”

You control how AWS services are used — so you must secure your data, configurations, and access.

- 🔐 Access Management: Set up IAM users, roles, policies, and MFA.

- 🧾 Data Protection: Encrypt data (in transit & at rest).

- 🛡️ Network Security: Configure firewalls, VPC security groups, and ACLs.

- ⚙️ Operating Systems: Patch, update, and secure EC2 instances.

- 💻 Application Security: Secure your app code, APIs, and configurations.

- 📜 Compliance Settings: Follow privacy regulations like GDPR or HIPAA.

⚖️ 3. Shared Responsibility by Service Type

| Service Type | AWS Responsibility | Customer Responsibility |

|---|---|---|

| IaaS (EC2, EBS, VPC) | Physical + Virtual Infrastructure | OS patches, firewall, data encryption |

| PaaS (RDS, Elastic Beanstalk) | Platform + DB Engine Security | Application code, DB access management |

| SaaS (Amazon WorkMail, Amazon Chime) | Full app + infrastructure | Data access, user permissions |

🧠 4. Real-World Example

Suppose you host a website using EC2 and S3:

- AWS ensures data center security, hardware reliability, and network stability.

- You must patch your OS, secure ports, and configure S3 buckets properly.

📊 5. Summary of Responsibilities

| Responsibility Area | AWS | Customer |

|---|---|---|

| Physical Hardware | ✅ | ❌ |

| Global Network | ✅ | ❌ |

| Virtualization Layer | ✅ | ❌ |

| Operating System | ❌ | ✅ |

| Applications | ❌ | ✅ |

| Identity & Access (IAM) | ❌ | ✅ |

| Data Encryption | ❌ | ✅ |
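The summary table can also be read as a simple lookup. The sketch below encodes it as a dictionary; the names `RESPONSIBILITY` and `who_secures` are illustrative, and the split shown applies to self-managed services such as EC2 (managed services shift more of the work to AWS).

```python
# Who secures what, copied from the summary table above.
RESPONSIBILITY = {
    "physical hardware": "AWS",
    "global network": "AWS",
    "virtualization layer": "AWS",
    "operating system": "Customer",
    "applications": "Customer",
    "identity & access (iam)": "Customer",
    "data encryption": "Customer",
}


def who_secures(area: str) -> str:
    """Return "AWS" or "Customer" for a responsibility area from the table."""
    key = area.strip().lower()
    if key not in RESPONSIBILITY:
        raise KeyError(f"unknown responsibility area: {area!r}")
    return RESPONSIBILITY[key]


for area in ("Physical Hardware", "Operating System", "Data Encryption"):
    print(f"{area}: {who_secures(area)}")
```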

1.7 Benefits of AWS

AWS provides many benefits to users and businesses, but the three most important ones are:

- ✅ Scalability

- ✅ Cost Efficiency

- ✅ Reliability

⚙️ 1. Scalability

What It Means: Scalability means AWS can automatically increase or decrease computing resources based on your application's demand.

- AWS uses EC2 and Auto Scaling Groups (ASG) to manage sudden traffic changes.

- You can add more servers (scale out) or increase power of existing ones (scale up).

- Prevents downtime during high demand.
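To see how scaling out follows demand, here is a simplified proportional rule in the spirit of a target-tracking policy: keep a metric (say, average CPU) near a target by resizing the group. This is a sketch under stated assumptions, not the exact algorithm AWS Auto Scaling uses, and the function name and parameters are hypothetical.

```python
import math


def desired_capacity(current: int, metric_value: float, target_value: float,
                     min_size: int, max_size: int) -> int:
    """Estimate how many instances keep the metric near its target.

    The group grows or shrinks in proportion to metric_value / target_value,
    and the result is clamped to the group's minimum and maximum size.
    """
    if current < 1 or target_value <= 0:
        raise ValueError("current must be >= 1 and target_value must be > 0")
    wanted = math.ceil(current * metric_value / target_value)
    return max(min_size, min(max_size, wanted))


# 4 instances at 90% CPU with a 60% target: scale out.
print(desired_capacity(4, 90, 60, min_size=2, max_size=10))
# 4 instances at 15% CPU: scale in, but never below the minimum.
print(desired_capacity(4, 15, 60, min_size=2, max_size=10))
```

Clamping to a minimum and maximum is what keeps a traffic spike from launching an unbounded number of instances.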

💰 2. Cost Efficiency (Pay-As-You-Go)

What It Means: AWS follows a pay-as-you-go model — you pay only for the resources you actually use, not for idle capacity.

- No upfront hardware investment required.

- Automatic scaling saves cost during low traffic.

- Reserved Instances or Savings Plans reduce long-term expenses.

- Free Tier available for testing and learning.

🔒 3. Reliability

What It Means: Reliability ensures your applications and data remain available and protected — even if something fails in the system.

- Data is stored across multiple Availability Zones (AZs) and Regions.

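- Many AWS services publish availability targets in their SLAs (for example, 99.99% for EC2 deployed across multiple AZs); exact figures vary by service.

- Load balancing, replication, and auto-recovery prevent single points of failure.

- Backup and replication features (such as EBS snapshots and S3 replication) support disaster recovery once you configure them.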
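An availability percentage is easier to judge when converted into downtime. The sketch below does that conversion and shows why running copies in more than one AZ helps. It assumes failures are independent, which is a simplification, and the helper names are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes_per_year(availability: float) -> float:
    """Convert an availability fraction (e.g. 0.9999) into minutes of downtime per year."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def combined_availability(single: float, copies: int) -> float:
    """Availability of a system that is up as long as at least one copy is up.

    Assumes each copy fails independently of the others.
    """
    return 1.0 - (1.0 - single) ** copies


print(f"99.99% uptime allows about {downtime_minutes_per_year(0.9999):.1f} minutes of downtime per year")
print(f"Two independent 99% copies give {combined_availability(0.99, 2):.4%} availability")
```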

🧱 Summary Table

| Benefit | Meaning | AWS Features That Support It | Real-World Example |

|---|---|---|---|

| Scalability | Adjusts resources automatically based on demand | Auto Scaling, Elastic Load Balancing | Website scales automatically during festival sales |

| Cost Efficiency | Pay only for what you use | Pay-as-you-go, Savings Plans, EC2 On-Demand | Lower costs during low-traffic periods |

| Reliability | System remains available and fault-tolerant | Multi-AZ Deployment, S3 Replication | App stays online even during outages |

⚙️ Scalable: Grows automatically with your needs.

💰 Cost-Effective: Pay only for what you use.

🔒 Reliable: Works even when parts fail.

📘 Learn more at the official AWS Website.

1.8 AWS Services You Will Learn as a Beginner

Think of AWS like a toolbox — each service is a tool.

- Compute: EC2, Lambda

- Storage: S3, EBS, EFS

- Databases: RDS, DynamoDB

- Networking: VPC, Route 53, CloudFront

- Security: IAM, KMS, Secrets Manager

1.9 Core Concepts of AWS Architecture

- Regions & Availability Zones: Global data centers for redundancy

- High Availability: Keep services running even during failure

- Scalability: Automatically adjust resources (Auto Scaling)

- Cost Optimization: Pay for what you use; use reserved instances

- Security: Use least privilege and encryption best practices

1.10 What is AWS Certification?

AWS Certification proves your knowledge and skills in using AWS. It shows you can design, deploy, and manage applications in the AWS cloud.

Certification Levels:

- Foundational – Beginner

- Associate – Intermediate (focus of this guide)

- Professional – Advanced

- Specialty – Expert in a specific domain

1.11 AWS Associate Exam Basics

- Format: Multiple-choice & multiple-answer

- Time: 130 minutes

- Domains:

  - Design Resilient Architectures

  - Design High-Performing Architectures

  - Design Secure Architectures

  - Design Cost-Optimized Architectures

- Tip: Use AWS Free Tier for hands-on practice

1.12 How to Start Learning AWS as a Beginner

- Sign up for AWS Free Tier

- Learn core services: EC2, S3, RDS, VPC, Lambda

- Understand cloud fundamentals: Regions, AZs, IAM

- Follow tutorials and deploy small projects

- Practice with mock exams

1.13 Simple Analogy

Think of AWS as a digital Lego set — each service is a Lego block. You can combine EC2, S3, Lambda, and VPC blocks to build anything from websites to enterprise systems. The AWS Associate exam tests your ability to connect these blocks securely and efficiently.

Module 02: Amazon EC2 – Instances (Easy & Detailed Notes)

Amazon EC2 (Elastic Compute Cloud) is the core compute service of AWS that lets you launch virtual servers on demand. This module explains EC2 concepts in a very simple and beginner-friendly way — including instance types, AMIs, security groups, EBS storage, key pairs, networking, load balancing, auto scaling, and pricing options. By the end of this module, you will understand how to deploy, secure, monitor, and scale EC2 instances effectively for real-world applications.

1. Amazon EC2 – Instances (Easy & Detailed Notes)

Amazon EC2 (Elastic Compute Cloud) is the AWS service that provides virtual servers, called instances, in the cloud. It lets you run applications, host websites, and store data, all on Amazon’s infrastructure. Let’s break down what each word in “Elastic Compute Cloud” means in a simple way 👇

⚙️ 1. Elastic

Meaning: “Elastic” means flexible — it can automatically scale up or down depending on your needs.

In EC2: You can increase resources (scale up) when your app or website has high traffic. You can reduce resources (scale down) when demand drops — helping you save money.

🧠 Think of it like a rubber band — it stretches when you need more power and contracts when you don’t.

📘 Example: If your website suddenly gets 10,000 visitors in one hour, an Auto Scaling group can launch more EC2 instances automatically. When the traffic goes down, those extra instances are terminated to reduce costs.

🖥️ 2. Compute

Meaning: “Compute” refers to the processing power — like CPU, RAM, and GPU — that runs your applications.

In EC2: You decide how much computing power you need (number of CPUs, amount of RAM, or GPU for graphics tasks). AWS then provides you a virtual machine that performs those tasks.

📘 Example: Running a web server, hosting a game server, or executing a data analysis script — all need compute resources. EC2 gives you that virtual computing power instantly.

☁️ 3. Cloud

Meaning: “Cloud” means on-demand access to IT resources (like servers, storage, and databases) through the internet — without owning physical hardware.

In AWS Cloud: You don’t buy servers; you rent them from Amazon’s data centers. You can access your resources anytime, anywhere using the internet. AWS handles all the maintenance — power, cooling, and hardware — while you just focus on using it.

📘 Example: Instead of buying a physical server for your app, you simply launch an EC2 instance from your AWS account. Then, you connect to it via SSH or a browser-based console and start using it right away.

✅ In Simple Words:

- Elastic → It grows or shrinks automatically as per your need.

- Compute → It’s the brainpower (CPU/RAM) that runs your programs.

- Cloud → You rent servers online instead of buying them physically.

So, Amazon EC2 simply means — a flexible (elastic) virtual computer (compute) that runs in Amazon’s cloud. It’s the foundation for almost everything you do in AWS.

🔹 Why EC2 Instances Are Important

| Feature | Description |

|---|---|

| 💻 Computing Power | Run apps, websites, and databases easily. |

| ⚙️ Scalability | Increase or decrease instances as needed. |

| 💰 Pay as You Go | Only pay for the time your instance is running. |

| 🌍 Global Availability | Launch instances in multiple AWS regions worldwide. |

🔹 Basic Components of an Instance

| Component | Description |

|---|---|

| AMI (Amazon Machine Image) | OS template — contains software/config (e.g., Amazon Linux, Ubuntu, Windows). |

| Instance Type | Defines CPU, RAM, and storage (e.g., t2.micro, m5.large). |

| Key Pair | Used for secure SSH (Linux) or RDP (Windows) login. |

| Security Group | Virtual firewall controlling inbound/outbound traffic. |

| Elastic IP | Static public IP address assignable to an instance. |

| EBS Volume | Block storage drive attached to store files permanently. |

🔹 Types of Instance Families (Based on Use Case)

| Family | Example | Best For |

|---|---|---|

| 🧮 General Purpose | t3, m6i | Balanced compute, memory, networking. |

| ⚡ Compute Optimized | c5, c6g | High CPU tasks (gaming, analytics). |

| 💾 Memory Optimized | r5, x1e | Databases and in-memory caching. |

| 🎥 Storage Optimized | i3, d2 | Big data, backups, and heavy I/O. |

| 💻 Accelerated Computing | p3, g5 | Machine learning and GPU rendering. |

🔹 Instance Lifecycle

- 🚀 Launch – Create a new instance from an AMI.

- 🟢 Running – Instance is active and billed per second/hour.

- ⏸️ Stop – Turned off, data in EBS volume remains safe.

- ❌ Terminate – Instance deleted, data lost unless backed up.

+----------+       +----------+       +------------+
|  Launch  | ----> | Running  | ----> |  Stopped   |
+----------+       +----------+       +------------+
                        |                   |
                        +---> Terminate <---+
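The lifecycle above can be modelled as a small state machine. The state names below are the ones EC2 reports for an instance (pending, running, stopping, stopped, shutting-down, terminated); the transition table itself is a simplified sketch that omits details such as reboot and hibernation.

```python
# Simplified EC2 instance lifecycle: state -> states it can move to next.
TRANSITIONS = {
    "pending": {"running"},
    "running": {"stopping", "shutting-down"},
    "stopping": {"stopped"},
    "stopped": {"pending", "terminated"},  # start again, or terminate
    "shutting-down": {"terminated"},
    "terminated": set(),                   # final state, cannot be restarted
}


def can_transition(current: str, target: str) -> bool:
    """Return True if the simplified model allows current -> target."""
    return target in TRANSITIONS.get(current, set())


def is_valid_path(states: list) -> bool:
    """Check that every consecutive pair of states is an allowed transition."""
    return all(can_transition(a, b) for a, b in zip(states, states[1:]))


# Launch, stop, then start again.
print(is_valid_path(["pending", "running", "stopping", "stopped", "pending", "running"]))
# A terminated instance cannot come back.
print(can_transition("terminated", "running"))
```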

🔍 Verifying Your EC2 Instance Configuration

After connecting to your EC2 instance using SSH, run the following commands to verify system configuration, hardware details, and network settings.

1️⃣ Check Operating System

Command:

cat /etc/os-release

Example Output:

NAME="Ubuntu"

VERSION="22.04.3 LTS (Jammy Jellyfish)"

ID=ubuntu

VERSION_ID="22.04"

2️⃣ Check Memory (RAM)

Command:

free -m

Example Output:

total used free

Mem: 1024 150 874

Swap: 0 0 0

3️⃣ Check CPU Information

Command:

lscpu

Example Output:

Architecture: x86_64

CPU(s): 1

Model name: Intel(R) Xeon(R)

CPU MHz: 2300.000

4️⃣ Check Network Configuration

Command:

ip a

Example Output:

eth0: inet 172.31.45.12/20

lo: inet 127.0.0.1/8

🔧 Additional Verification Commands

5️⃣ Check Disk Usage

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/root 8G 1.2G 7G 15% /

6️⃣ Check Hostname

hostname

7️⃣ Check System Uptime

uptime -p

8️⃣ Check Running Services

systemctl list-units --type=service --state=running

9️⃣ Check Open Ports

ss -tulnp

🔟 Check Firewall Status (Ubuntu)

sudo ufw status

1️⃣1️⃣ Get EC2 Metadata (Instance Details)

curl http://169.254.169.254/latest/meta-data/

Note: on instances that enforce IMDSv2, this request returns an error unless it includes a session token header.

1️⃣2️⃣ Verify Public IP

curl ifconfig.me

🔹 Instance Pricing Models

| Model | Description |

|---|---|

| On-Demand | Pay only when the instance runs — flexible, no commitment. |

| Reserved | 1–3 year commitment; lower cost for long-term workloads. |

| Spot | Buy unused capacity at discount; can be interrupted anytime. |

| Dedicated Host | Physical server exclusively for your organization. |

🔹 Common Example – Hosting a Website

- Go to EC2 Dashboard → Launch Instance

- Choose AMI (e.g., Ubuntu)

- Select Instance Type (e.g., t2.micro, Free Tier eligible)

- Add Key Pair for SSH login

- Configure Security Group (allow HTTP, HTTPS, SSH)

- Launch instance → Connect using PuTTY or SSH

- Install Apache or Nginx → Website live 🌐

🔹 Advantages of EC2

- Highly Scalable

- Flexible Configuration

- Secure (Key Pair + Security Group)

- Cost-Effective

- Easy to Automate (via AWS CLI or SDKs)

🧠 Simple Summary

| Term | Meaning |

|---|---|

| EC2 Instance | Virtual server in AWS |

| AMI | Pre-configured image to launch instance |

| Key Pair | For secure login |

| Security Group | Virtual firewall |

| Elastic IP | Permanent public IP address |

| EBS Volume | Attached storage |

1.2. How to Create an IAM User in AWS

IAM (Identity and Access Management) helps you securely manage access to AWS services.

🎯 Purpose

- ✅ Create users and groups

- ✅ Manage permissions to AWS resources

- ✅ Control who can access what

🪟 Step 1: Open the IAM Console

- Go to AWS Console → IAM.

- Click Users in the left menu → Create User.

🧍 Step 2: Add User Details

- User name: Example → developer-shekhar

- Access Type:

  - ☁️ Programmatic Access: via CLI / API

  - 🖥️ Console Access: via AWS web login

- Set password → choose “Require password reset on first login”

🔐 Step 3: Set Permissions

- Option 1: Attach existing policy (e.g., AdministratorAccess)

- Option 2: Add user to group (recommended for multiple users)

- Option 3: Copy permissions from another user

- Option 4: Create custom policy (JSON)
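For Option 4, a custom policy is a JSON document with a Version and a list of Statement entries. The sketch below builds a small read-only policy for one S3 bucket and prints it as JSON that could be pasted into the policy editor. The bucket name example-bucket and the function name are placeholders chosen for illustration.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build an IAM policy document allowing read-only access to one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Reading applies to the objects inside the bucket.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


policy = read_only_bucket_policy("example-bucket")  # placeholder bucket name
print(json.dumps(policy, indent=2))
```

A narrow policy like this follows the least-privilege idea: the user gets only the actions it needs instead of a broad policy such as AdministratorAccess.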

🏷️ Step 4: Add Tags (Optional)

Tags help organize users — e.g., Project=Test, Department=IT.

🧾 Step 5: Review & Create

- Review details and click Create user.

- Save the Access Key ID and Secret Access Key (if programmatic access enabled).

🧪 Step 6: Test IAM User

- Log out of the root account.

- Sign in with IAM user credentials.

- Verify allowed services and permissions.

1.3. AWS EC2 Key Pair — Complete Explanation

A Key Pair in AWS is used for secure login to your EC2 instances — instead of passwords.

🔑 1. What is a Key Pair?

It’s a combination of:

- 🔓 Public Key → stored inside AWS

- 🔐 Private Key (.pem/.ppk) → downloaded and kept by you

📘 2. Why It’s Needed

- Used for SSH connection to Linux servers.

- Ensures secure, password-free login.

- Without the private key, you cannot access your instance.

⚠️ 3. Does Key Pair Depend on Availability Zone?

Many beginners think that a Key Pair is limited to an Availability Zone (AZ), but that is NOT correct.

🌍 Key Pair Scope → REGION Level

- A Key Pair belongs to one **AWS Region** (e.g., ap-south-1).

- It can be used in ALL Availability Zones inside that Region:

  - ap-south-1a

  - ap-south-1b

  - ap-south-1c

💚 So When Does a Key Pair “Not Work”?

Only in these cases:

- ❌ You selected a different Region (e.g., created key in ap-south-1 but instance in us-east-1)

- ❌ You lost or deleted the private key (.pem file)

- ❌ Wrong file permission on your PC (must be chmod 400)

- ❌ You entered the wrong username (the default depends on the AMI, e.g., ec2-user for Amazon Linux, ubuntu for Ubuntu, centos for CentOS)
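The file-permission case in the list above is easy to check before connecting. The sketch below reports whether a key file is closed to group and other users, which is what chmod 400 achieves. It assumes a POSIX system (Linux or macOS) and uses a throwaway placeholder file rather than a real key; the function name is illustrative.

```python
import os
import stat
import tempfile


def key_permissions_ok(path: str) -> bool:
    """Return True if the file grants no permissions to group or others."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


with tempfile.TemporaryDirectory() as tmp:
    key_path = os.path.join(tmp, "MyKeyPair.pem")
    with open(key_path, "w") as handle:
        handle.write("placeholder, not a real private key\n")

    os.chmod(key_path, 0o644)             # readable by everyone: SSH would refuse it
    too_open = key_permissions_ok(key_path)

    os.chmod(key_path, 0o400)             # owner read-only, same as chmod 400
    locked_down = key_permissions_ok(key_path)

print(f"mode 644 accepted: {too_open}, mode 400 accepted: {locked_down}")
```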

🌍 4. Difference Between AWS Region & Availability Zone (Very Easy Explanation)

Before working with EC2, VPC, or Key Pairs, you must clearly understand the difference between an AWS Region and an Availability Zone (AZ). This confusion is common among beginners.

🟦 What is an AWS Region?

A Region is a geographical location like a country or large area. Example: Mumbai, Singapore, London, Virginia.

- 🌎 A region contains multiple Availability Zones.

- 🔐 Key Pairs, Snapshots, AMIs are created at the region level.

- 💡 Data never leaves a region unless you move it.

🟩 What is an Availability Zone (AZ)?

An Availability Zone consists of one or more separate data centers inside a Region. Each Region has at least three AZs, and some have as many as six.

- 🏢 AZs are physically separate datacenters.

- 🔌 Each AZ has its own power, network, cooling.

- 🛡️ Designed so if one AZ fails, others continue working.

• ap-south-1a

• ap-south-1b

• ap-south-1c

📊 Region vs Availability Zone (Quick Difference)

| Feature | AWS Region | Availability Zone (AZ) |

|---|---|---|

| Definition | Geographical area (country/continent) | Datacenter inside a region |

| Example | ap-south-1 (Mumbai) | ap-south-1a, ap-south-1b |

| Number | 30+ Regions | 3–6 AZs per Region |

| Scope of Key Pair | Region-level | Not AZ-specific |

| Network Latency | High between different regions | Very low between AZs |

| Used For | Choosing where your data lives | High availability and failover |

🧠 Super Easy Analogy (School Example)

Think of an AWS Region as a school and Availability Zones as classrooms.

- 🏫 One school = Region

- 🏠 Multiple classrooms = AZs

- If one classroom has a problem, the school still works → high availability

🪟 5. Create a Key Pair (Console Method)

- Go to EC2 Dashboard → Key Pairs.

- Click Create Key Pair.

- Choose:

  - Name: e.g., my-aws-key

  - Type: RSA or ED25519

  - Format: PEM (Linux/macOS) or PPK (Windows)

- Download the private key file — only once!

💻 6. Connect to EC2 Instance

- Find Public IP in EC2 dashboard.

- Use SSH command:

ssh -i "MyKeyPair.pem" ec2-user@<public-ip>

- For Ubuntu AMI:

ssh -i "MyKeyPair.pem" ubuntu@<public-ip>
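The SSH commands above differ only in the login name, which depends on the AMI. The sketch below builds the command from a small lookup table. The usernames are the commonly documented defaults (check your AMI's documentation if a login fails), the function name is hypothetical, and 203.0.113.10 is a reserved documentation address standing in for your instance's public IP.

```python
import shlex

# Commonly documented default login names per AMI family (assumption: defaults only).
DEFAULT_USERS = {
    "amazon-linux": "ec2-user",
    "ubuntu": "ubuntu",
    "centos": "centos",
    "debian": "admin",
}


def ssh_command(key_file: str, ami_family: str, host: str) -> list:
    """Return the ssh command as an argument list for the given AMI family."""
    if ami_family not in DEFAULT_USERS:
        raise ValueError(f"no default user known for AMI family {ami_family!r}")
    user = DEFAULT_USERS[ami_family]
    return ["ssh", "-i", key_file, f"{user}@{host}"]


command = ssh_command("MyKeyPair.pem", "ubuntu", "203.0.113.10")
print(shlex.join(command))
```

Keeping the command as a list makes it safe to pass to `subprocess.run` without shell quoting problems.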

🧠 7. Best Practices

- 🗝️ Keep it private: never share your .pem file.

- 📂 Store backups safely (e.g., encrypted USB).

- 🔁 Use separate keys for Dev/Test/Prod.

- 🧼 Delete unused keys regularly.

📋 8. Common Commands (AWS CLI)

aws ec2 describe-key-pairs

aws ec2 delete-key-pair --key-name OldKey

aws ec2 create-key-pair --key-name MyKeyPair --query 'KeyMaterial' --output text > MyKeyPair.pem

chmod 400 MyKeyPair.pem

2.1a Amazon EBS – Elastic Block Store

Amazon EBS (Elastic Block Store) provides block-level storage for EC2 instances. Think of EBS as the hard disk of your virtual machine. It stores OS files, application data, logs, databases, and more.

🔹 Key Features of EBS

- 🔒 Durable: volumes are replicated within their Availability Zone (io2 volumes are designed for 99.999% durability)

- ⚡ High Performance – suitable for databases & applications

- ♻ Scalable – increase storage anytime

- 📸 Supports Snapshots for backups

- 🔁 Attach/Detach volumes between instances

- 🚀 Integrated with Auto Scaling & EC2

🔹 EBS vs Instance Store

| Feature | EBS | Instance Store |

|---|---|---|

| Persistence | Persistent (survives stop/start) | Temporary (deleted on stop/terminate) |

| Use Case | OS, apps, DB | Cache, temporary data |

| Backup | Snapshots supported | No backup support |

2.1b How to Create an EBS Volume (Step-by-Step)

An EBS volume can be created from the AWS Management Console or using the AWS CLI. Follow the steps below to create and attach a new EBS volume to your EC2 instance.

🖥️ 1️⃣ Create an EBS Volume from AWS Console

- Go to AWS Console → EC2 Dashboard

- In the left menu, click Elastic Block Store → Volumes

- Click Create Volume

- Choose Volume Type:

  - gp3 (General Purpose SSD): Default

  - io2: High-performance databases

  - st1: Big data, streaming

  - sc1: Cold/infrequent access

- Enter Size (Example: 8 GiB)

- Select Availability Zone ⚠ Must match your EC2 instance AZ

- Choose Encryption (optional)

- Click Create Volume

📎 2️⃣ Attach the Volume to an EC2 Instance

- After creating the volume → Select it

- Click Actions → Attach Volume

- Select your EC2 instance

- Choose a device name (Example: /dev/sdf)

- Click Attach

💽 3️⃣ Format & Mount the Volume (Inside EC2)

SSH into your EC2 instance and run:

👉 Check if the new disk is detected:

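lsblk

👉 Format the disk:

sudo mkfs -t xfs /dev/sdf

👉 Create a mount directory:

sudo mkdir /data

👉 Mount the volume:

sudo mount /dev/sdf /data

👉 Verify:

df -h

💡 On many instance types the disk shows up under a different name than /dev/sdf (for example /dev/xvdf or /dev/nvme1n1); use the name reported by lsblk. Add an entry to /etc/fstab for persistence.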
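For the persistence step, each mount is one line in /etc/fstab. The sketch below formats such a line. It assumes you identify the volume by its filesystem UUID (as printed by sudo blkid) rather than by device name, and it adds the nofail option so the instance still boots if the volume is missing. The UUID shown is a made-up placeholder and the function name is illustrative.

```python
def fstab_entry(uuid: str, mount_point: str, fs_type: str = "xfs") -> str:
    """Format one /etc/fstab line that mounts a volume by UUID at boot."""
    if not uuid:
        raise ValueError("uuid must not be empty")
    if not mount_point.startswith("/"):
        raise ValueError("mount_point must be an absolute path")
    # Fields: device, mount point, filesystem, options, dump flag, fsck order.
    return f"UUID={uuid}  {mount_point}  {fs_type}  defaults,nofail  0  2"


# Placeholder UUID for illustration; use the value reported by blkid.
print(fstab_entry("00000000-1111-2222-3333-444444444444", "/data"))
```

After appending the line to /etc/fstab, running sudo mount -a is a quick way to confirm the entry parses before the next reboot.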

💻 4️⃣ Create an EBS Volume Using AWS CLI

aws ec2 create-volume \
  --availability-zone ap-south-1a \
  --size 10 \
  --volume-type gp3

📎 Attach the Volume (CLI)

aws ec2 attach-volume \
  --volume-id vol-1234567890 \
  --instance-id i-0123456789 \
  --device /dev/sdf
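Because a volume can only be attached to an instance in the same Availability Zone, it can help to check that before calling the CLI. The sketch below validates the AZ match and then returns the same attach-volume arguments shown above as a list. The function name is hypothetical and the IDs are the placeholder values from this guide.

```python
def attach_volume_args(volume_id: str, volume_az: str,
                       instance_id: str, instance_az: str,
                       device: str = "/dev/sdf") -> list:
    """Return the `aws ec2 attach-volume` argument list after an AZ check."""
    if volume_az != instance_az:
        raise ValueError(
            f"volume is in {volume_az} but instance is in {instance_az}; "
            "they must be in the same Availability Zone"
        )
    return [
        "aws", "ec2", "attach-volume",
        "--volume-id", volume_id,
        "--instance-id", instance_id,
        "--device", device,
    ]


args = attach_volume_args("vol-1234567890", "ap-south-1a",
                          "i-0123456789", "ap-south-1a")
print(" ".join(args))
```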

🪟 How to Create & Use an EBS Volume on Windows EC2

Windows EC2 instances handle new EBS volumes differently from Linux. Once the volume is created and attached, you must initialize the disk, create partitions, format it (NTFS/ReFS), and assign a drive letter using **Disk Management**, **DiskPart**, or **PowerShell**.

🖥️ 1️⃣ Create a New EBS Volume via AWS Console

This step is identical for Windows and Linux:

- Open AWS Console → EC2 Dashboard

- Go to Elastic Block Store → Volumes

- Click Create Volume

- Select Volume Type:

- gp3 — Best for general Windows workloads

- io2 — High IOPS for SQL Server / Exchange

- st1/sc1 — Not recommended for Windows OS drives

- Enter Size (Example: 20 GiB)

- Select the same Availability Zone as your instance

- Optional: Enable Encryption (KMS)

- Click Create Volume

📎 2️⃣ Attach the Volume to Your Windows EC2 Instance

- Select the newly created volume

- Click Actions → Attach Volume

- Select your Windows EC2 instance

- Device name usually appears as /dev/sdf (AWS name)

- Click Attach

💽 3️⃣ Initialize, Format & Assign Drive Letter (Windows OS)

Now log in to Windows EC2 using RDP, then follow the steps below.

🧭 Method 1: Using Disk Management (GUI)

- Press Windows + R, type: diskmgmt.msc

- Find the disk labeled Unknown / Not Initialized

- Right-click → Initialize Disk

- Select partition style:

- GPT — Recommended for modern Windows versions

- MBR — Only for legacy systems

- Right-click on Unallocated Space → New Simple Volume

- Choose a drive letter (Ex: E:)

- Select filesystem:

- NTFS — Best for general use

- ReFS — For Windows Server Storage Spaces

- Click Finish

💻 Method 2: Using DiskPart (Command Line)

Run the following commands in an elevated Command Prompt:

diskpart

list disk

select disk 1

attributes disk clear readonly

online disk

convert gpt

create partition primary

format fs=ntfs quick

assign letter=E

exit

Use list disk to verify the disk number before running select disk.

⚡ Method 3: Using PowerShell (Recommended for automation)

Get-Disk | Where-Object PartitionStyle -Eq "RAW" | Initialize-Disk -PartitionStyle GPT

New-Partition -DiskNumber 1 -UseMaximumSize -AssignDriveLetter |

Format-Volume -FileSystem NTFS -NewFileSystemLabel "DataDisk"

🚀 5️⃣ EBS Best Practices for Windows

- Always enable CloudWatch Disk Metrics for monitoring

- Use gp3/io2 for Windows Server workloads

- Never use st1/sc1 for Windows boot volumes

- Enable Volume Shadow Copy (VSS) for backups

- Defragment NTFS volumes only on HDD-backed types (st1/sc1); SSD-backed gp3/io2 volumes do not benefit from it

- Avoid ReFS unless required

- Always create AMI backups before resizing volumes

🛠️ 6️⃣ Troubleshooting (Windows)

- Disk not visible? → Run Get-Disk in PowerShell

- Disk shows “Offline (Policy)”? → Run: Set-Disk -Number 1 -IsOffline $false

- GPT/MBR warning? → Use GPT for modern Windows Server

- Cannot assign drive letter? → Check if letter already in use

2.1c EBS Volume Types (Use Cases & Comparison)

AWS provides multiple EBS volume types optimized for performance, cost, and workload requirements.

🔹 SSD-Based Volumes (High Performance)

| Type | Description | Best For |

|---|---|---|

| gp3 (General Purpose SSD) | Offers balanced price/performance | Boot volumes, general workloads |

| io2 / io2 Block Express | Highest IOPS SSD volume | Databases, mission-critical apps |

🔹 HDD-Based Volumes (Cost-Optimized)

| Type | Description | Best For |

|---|---|---|

| st1 (Throughput Optimized HDD) | High throughput for large data reads/writes | Big data, analytics, log processing |

| sc1 (Cold HDD) | Lowest cost HDD volumes | Infrequently accessed data |

2.1d EBS Snapshots (Backup & Restore)

A Snapshot is a backup of your EBS volume stored in Amazon S3. Snapshots allow you to restore data, create new volumes, or copy backups across regions.

🔹 Snapshot Features

- 📦 Back up EBS volumes anytime

- 🚀 Restore a snapshot into an EBS volume

- 🌍 Copy snapshots across regions (DR setup)

- ⚡ Fast Snapshot Restore (FSR) for instant availability

- 🔁 Automate using Lifecycle Manager

🔹 Common Snapshot Commands

aws ec2 create-snapshot --volume-id vol-12345 --description "Backup-1"

aws ec2 describe-snapshots --owner self

aws ec2 delete-snapshot --snapshot-id snap-12345

2.1e EBS Lifecycle Manager (DLM) – Automated Backups

AWS Data Lifecycle Manager (DLM) automatically creates, retains, and deletes EBS snapshots based on policies you define.

🔹 What You Can Automate with DLM

- 📆 Daily / Weekly snapshot creation

- 🗂 Retention policy (keep for N days)

- 🔁 Deletion of old snapshots

- 🌍 Cross-Region copy

- 🚀 Automate FSR-enabled snapshots

🔹 Example Use Case

- Create snapshot every 24 hours

- Retain 7 snapshots

- Tag snapshots for tracking

🔹 CLI Example – Create DLM Policy

aws dlm create-lifecycle-policy \

--execution-role-arn arn:aws:iam::123456789012:role/service-role/AWSDataLifecycleManagerDefaultRole \

--description "Daily backups" \

--state ENABLED \

--policy-details file://policy.json
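
The command above points at a policy.json file that is not shown. A minimal sketch of what that file might contain for the example use case (snapshot every 24 hours, retain 7, tag for tracking) is given below. The tag keys and values, the schedule name, and the 03:00 start time are assumptions for illustration; check the field names against the current DLM API reference before using them.

```python
import json

# Hypothetical policy for the example use case: one snapshot every
# 24 hours, keep the 7 most recent, and tag snapshots for tracking.
policy_details = {
    "ResourceTypes": ["VOLUME"],
    # Only volumes carrying this tag are backed up (tag is an assumption).
    "TargetTags": [{"Key": "Backup", "Value": "true"}],
    "Schedules": [
        {
            "Name": "DailySnapshots",
            "CopyTags": True,
            "TagsToAdd": [{"Key": "CreatedBy", "Value": "DLM"}],
            "CreateRule": {"Interval": 24, "IntervalUnit": "HOURS", "Times": ["03:00"]},
            "RetainRule": {"Count": 7},
        }
    ],
}


def render_policy(details: dict) -> str:
    """Serialize the policy so it can be saved as policy.json."""
    return json.dumps(details, indent=2)


print(render_policy(policy_details))
```

Saving the printed JSON as policy.json gives the CLI something to load through --policy-details file://policy.json.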

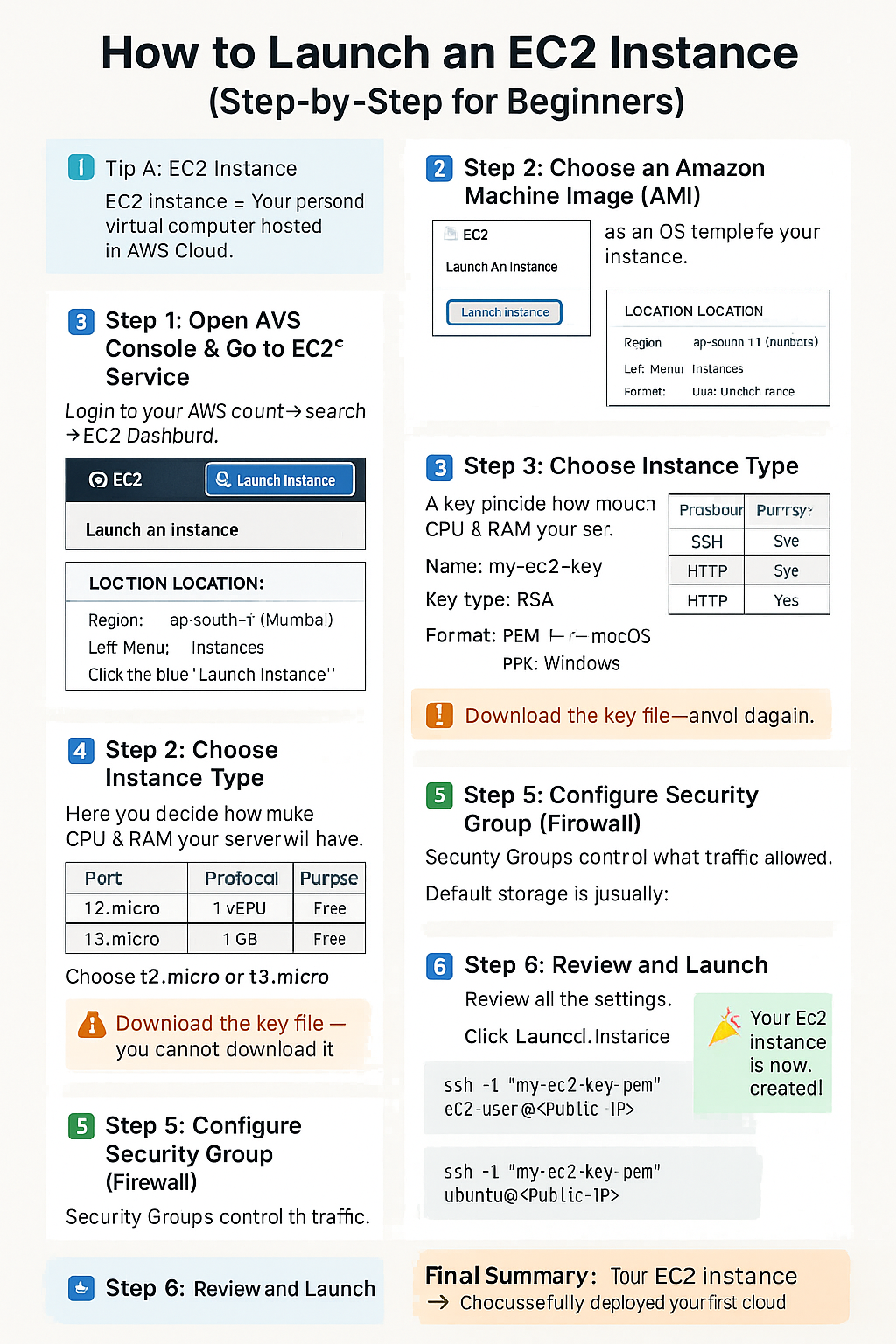

2.2 How to Launch an EC2 Instance (Step-by-Step for Beginners)

Let’s walk through how to launch and connect to an EC2 instance in AWS, from start to finish. This guide uses the AWS Management Console, which suits beginners 🎓.

Before you start, you need:

- An AWS Account

- A verified email and payment method

- IAM user with AmazonEC2FullAccess permissions

🪟 Step 1: Open the EC2 Console

- Login to AWS Console.

- Search for EC2 in the search bar.

- Click EC2 → You’ll reach the EC2 Dashboard.

https://console.aws.amazon.com/ec2/

🖥️ Step 2: Click “Launch Instance”

This starts the setup wizard to create your virtual server.

⚙️ Step 3: Configure Instance Basics

- 🧩 Name: Enter something like my-first-ec2

- 🪟 Application/OS Image (AMI): Choose Amazon Linux 2023 or Ubuntu 22.04

- 💻 Instance Type: Select t2.micro (Free Tier eligible)

- 🔑 Key Pair: Create or select existing key (used for SSH login)

- 🔒 Network Settings: Allow:

- SSH (port 22) → for remote access

- HTTP (port 80) → for website

- HTTPS (port 443) → for secure site

- 💾 Storage: Default 8 GB is fine for practice

🚀 Step 4: Launch the Instance

- Review all configurations.

- Click Launch Instance.

- Wait a few seconds until the instance state = Running.

🌐 Step 5: Connect to Your Instance

- Select your instance → Click Connect.

- Choose “SSH client” tab.

- Follow the SSH command example shown.

💻 Example for Linux/Mac Terminal:

ssh -i "my-key.pem" ec2-user@

💻 Example for Windows (PuTTY):

- Convert .pem → .ppk using PuTTYgen.

- Open PuTTY → Host Name: ec2-user@

- Go to Connection → SSH → Auth → Browse and select your .ppk file.

- Click “Open” → You’re connected!

📦 Step 6: Install a Web Server (Optional)

Once logged in, you can install Nginx or Apache to host a site.

# For Amazon Linux / RHEL

sudo yum update -y

sudo yum install httpd -y

sudo systemctl start httpd

sudo systemctl enable httpd

echo "Hello from EC2!" | sudo tee /var/www/html/index.html

# For Ubuntu

sudo apt update

sudo apt install apache2 -y

sudo systemctl start apache2

sudo systemctl enable apache2

🛑 Step 7: Stop or Terminate When Done

- Go to EC2 Dashboard → Instances

- Select the instance → Actions → Instance State

- Choose:

- Stop → Pauses instance (no billing for compute; attached EBS storage is still billed)

- Terminate → Deletes instance and data permanently

🧠 Step 8: Understand the Behind-the-Scenes

- 💽 AMI — base OS template.

- 🔢 Instance Type — defines hardware (CPU/RAM).

- 🔒 Security Group — defines network access.

- 🗝️ Key Pair — secure login credentials.

- 📊 Elastic IP — permanent IP (optional).

- 📁 EBS Volume — persistent storage.

✅ Quick Summary Table

| Step | Action | Purpose |

|---|---|---|

| 1 | Open EC2 Console | Access EC2 service |

| 2 | Launch Instance | Start instance creation wizard |

| 3 | Choose AMI & Type | Select OS & hardware |

| 4 | Set Key Pair & Security | Ensure secure access |

| 5 | Launch & Connect | Boot up and SSH in |

| 6 | Install Web Server | Host an app or website |

| 7 | Stop/Terminate | Manage billing & lifecycle |

2.3 EC2 User Data – Bootstrap Script (Amazon Linux & Ubuntu)

When launching an EC2 instance, you can use User Data to automatically configure the server during the first boot. This is perfect for installing software, enabling services, creating files, or deploying a basic website.

🟦 Amazon Linux 2 – Bootstrap Script

Paste this script into the EC2 "User Data" box when launching a new Amazon Linux instance.

#!/bin/bash

# User Data runs as root, so "sudo su" is not needed

yum install httpd -y

systemctl start httpd

systemctl enable httpd

cd /var/www/html

echo "This is my Bootstrap Server" > index.html

- Installs Apache (httpd)

- Starts and enables the service

- Creates a simple homepage at

/var/www/html/index.html

🟩 Ubuntu Server – Bootstrap Script

Use this script when launching an Ubuntu EC2 instance.

#!/bin/bash

# User Data runs as root, so "sudo su" is not needed

apt update -y

apt install apache2 -y

systemctl start apache2

systemctl enable apache2

cd /var/www/html

echo "Welcome to Arena Bootstrap Server" > index.html

- Installs Apache2 (Ubuntu version)

- Starts and enables Apache service

- Adds a custom homepage

💡 Tips for Using User Data

- Always put #!/bin/bash at the top of the script

- Ensure the instance security group allows port 80

- For Amazon Linux 2023, use dnf install instead of yum

- User Data executes only on FIRST BOOT unless configured otherwise

2.4 What are EC2 Pricing Models?

When you use Amazon EC2 (Elastic Compute Cloud), you rent virtual servers (instances) on AWS to run your applications, websites, or systems. You can also choose how to pay for that computing power. The right model depends on:

- ⏱️ How long you want to use the instance

- 📊 How predictable your workload is

- 💰 How much you want to save

There are mainly three traditional pricing models:

- 👉 On-Demand

- 👉 Reserved Instances

- 👉 Spot Instances

And one modern option called Savings Plan.

🟢 1. On-Demand Instances

You pay for compute capacity by the second or hour, with no long-term commitment.

🔧 Use Cases:

- Testing or learning projects

- Short-term applications

- Unpredictable workloads

- Development and staging environments

Suppose you start an EC2 instance for 5 hours → You’ll pay only for 5 hours of compute time.

Stop or terminate → billing stops.

It’s like using a taxi — you pay only for the ride.

✅ Advantages:

- No commitment or contract

- Start and stop anytime

- Very flexible and simple

- Great for beginners or testing

⚠️ Disadvantages:

- Highest hourly cost

- Not cost-effective for 24/7 usage

💰 When to Choose: For new users, experiments, or unpredictable workloads.

🟠 2. Reserved Instances (RI)

You commit to a 1- or 3-year term in exchange for a lower hourly rate. Payment options:

- All Upfront – Maximum discount

- Partial Upfront – Balanced cost

- No Upfront – Monthly payment, lowest discount

🔧 Use Cases:

- Long-running production servers

- Databases and backend systems

- Predictable workloads (websites, enterprise apps)

A company runs its website 24/7 → buys a 3-year RI → saves up to 72%. It’s like buying a car instead of renting daily.

✅ Advantages:

- Huge long-term savings

- Capacity reservation (zonal RIs only)

- Standard or Convertible options

⚠️ Disadvantages:

- Lock-in for 1–3 years

- Limited flexibility in instance type or region

💰 When to Choose: For predictable workloads or long-term production apps.

🔵 3. Spot Instances

You run on spare EC2 capacity at a steep discount, in exchange for the risk of interruption.

🔧 Use Cases:

- Batch or background processing

- Machine Learning training

- Data analytics

- Testing and development (non-critical)

Training an AI model on Spot Instances can cost up to 90% less. If AWS reclaims the capacity, the instance is interrupted after a two-minute warning. It’s like a standby flight: cheap but uncertain.

✅ Advantages:

- Lowest cost (up to 90% savings)

- Ideal for flexible workloads

⚠️ Disadvantages:

- Can be interrupted anytime

- Not for production workloads

💰 When to Choose: For temporary or interruptible workloads needing cost efficiency.

⚙️ (Bonus) AWS Savings Plans

A modern, flexible pricing model offering RI-like discounts but with more freedom. You commit to a spend amount per hour ($/hr) for 1 or 3 years, and AWS applies discounts automatically across eligible services (EC2, Lambda, Fargate).

🧠 Summary Table

| Feature | On-Demand | Reserved Instance | Spot Instance |

|---|---|---|---|

| Payment Type | Pay per use | 1- or 3-year commitment | Current Spot price for spare capacity (no bidding) |

| Discount | None | Up to 72% | Up to 90% |

| Flexibility | Very High | Medium | Low |

| Reliability | Runs until you stop it | Runs until you stop it | May be interrupted anytime |

| Best For | Testing, short-term apps | Long-term stable apps | Cheap, flexible batch jobs |

| Billing Stops When Stopped? | ✅ Yes | ❌ No | ✅ Yes (but may stop anytime) |

🔍 Visual Diagram (Text-based)

Cost Comparison ↓

Spot (💸 Cheapest)

↓

Reserved (💰 Affordable)

↓

On-Demand (💵 Expensive)

Commitment Level ↑

On-Demand (None)

↑

Spot (Flexible)

↑

Reserved (Fixed)

| You Want... | Choose... |

|---|---|

| Full freedom and flexibility | 🟢 On-Demand |

| Long-term savings and stability | 🟠 Reserved Instance |

| Ultra-low cost for temporary use | 🔵 Spot Instance |
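
To make the trade-off concrete, the sketch below compares the monthly cost of one always-on instance under each model. The hourly rate and the discount percentages are hypothetical round numbers chosen for illustration, not real AWS prices; actual discounts vary by instance type, Region, and term.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_cost(on_demand_hourly: float, discount_pct: float = 0.0) -> float:
    """Monthly cost of one always-on instance after a percentage discount."""
    return round(on_demand_hourly * (1 - discount_pct / 100) * HOURS_PER_MONTH, 2)


RATE = 0.10  # hypothetical On-Demand price in USD per hour

# Discount values are illustrative assumptions only.
for model, discount in [("On-Demand", 0), ("Reserved (40% off)", 40), ("Spot (70% off)", 70)]:
    print(f"{model:<20} ${monthly_cost(RATE, discount):.2f}/month")
```

The same function shows why On-Demand suits short experiments: an instance that runs only a few hours never accumulates enough cost for a commitment to pay off.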

2.5 AWS Application Load Balancer (ALB)

An Application Load Balancer (ALB) is a Layer 7 (Application Layer) service that intelligently distributes HTTP and HTTPS traffic across multiple targets like EC2 instances, containers, IPs, or Lambda functions in multiple Availability Zones.

- ✅ Highly Available

- ⚙️ Scalable and Flexible

- 🔒 Secure (supports SSL/TLS and WAF)

- 🌐 Smart Routing (URL, Host, Header-based)

🔹 1. Types of AWS Load Balancers

| Type | Layer | Use Case |

|---|---|---|

| Application Load Balancer (ALB) | Layer 7 | HTTP/HTTPS routing (Web apps, APIs) |

| Network Load Balancer (NLB) | Layer 4 | TCP/UDP traffic (Gaming, Real-time apps) |

| Classic Load Balancer (CLB) | Layer 4 & 7 | Legacy workloads |

🏗️ 2. ALB Architecture Overview

Internet

↓

┌────────────┐

│ ALB (DNS) │ ← Distributes requests

└────────────┘

↓

┌──────────┴──────────┐

│ │

EC2-1 EC2-2

(Targets in Target Group)

Clients access via DNS (e.g. myapp-alb-123456.ap-south-1.elb.amazonaws.com).

ALB forwards traffic based on listener rules to the registered targets.

⚙️ 3. Key ALB Components

| Component | Description |

|---|---|

| Load Balancer | Entry point for all incoming traffic. |

| Listener | Checks for connection requests on a configured protocol and port (e.g., HTTP:80, HTTPS:443). |

| Rules | Define how traffic is routed (Path, Host, Header). |

| Target Group | Group of registered targets receiving traffic. |

| Health Check | Regularly checks target status before routing traffic. |

🎯 4. Listener Rules (Routing Logic)

ALB inspects requests and routes traffic using listener rules:

| Rule Type | Example | Description |

|---|---|---|

| Host-based | api.example.com → API servers | Routes traffic by domain name |

| Path-based | /images/* → image servers | Routes by URL path |

| Header-based | User-Agent=Mobile | Routes by HTTP headers |

| Query-based | ?type=premium | Routes by query parameters |

🧩 5. Target Groups

Each Target Group defines target type, port, and health check configuration.

- Type: EC2, IP, Lambda, ECS Containers

- Port: Example → 80 or 8080

- Health Check Path: /health

- Healthy Threshold: 5

- Unhealthy Threshold: 2
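
The two thresholds can be read as consecutive-result counters. The sketch below is a simplified model of that idea, using the values from the list above; it is not the load balancer's actual implementation, and track_health is a hypothetical name.

```python
def track_health(results, healthy_threshold=5, unhealthy_threshold=2, start_healthy=False):
    """Return the target's state after each check (True means the check passed)."""
    healthy = start_healthy
    streak_ok = streak_fail = 0
    states = []
    for passed in results:
        if passed:
            streak_ok += 1
            streak_fail = 0
            # Enough consecutive successes: mark the target healthy.
            if streak_ok >= healthy_threshold:
                healthy = True
        else:
            streak_fail += 1
            streak_ok = 0
            # Enough consecutive failures: mark the target unhealthy.
            if streak_fail >= unhealthy_threshold:
                healthy = False
        states.append("healthy" if healthy else "unhealthy")
    return states


checks = [True] * 5 + [False, True, False, False]
print(track_health(checks))
```

In this run the target becomes healthy on the fifth success, survives a single isolated failure, and is marked unhealthy only after two failures in a row.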

🚀 6. Key ALB Features

- 🌐 Content-based Routing — by URL, host, or header.

- 🧭 Sticky Sessions — session affinity per target group.

- 🔐 SSL/TLS Termination — via AWS Certificate Manager (ACM).

- ⚡ HTTP/2 & WebSocket — modern and real-time support.

- 🧩 Integration with ECS, Lambda, WAF — for microservices and security.

- 📜 Access Logs — stored in S3 for auditing.

🪟 7. Steps to Create an Application Load Balancer

A. Using AWS Console

- Open EC2 Dashboard → “Load Balancers” → Create Load Balancer

- Select Application Load Balancer

- Set:

- Name: my-alb-demo

- Scheme: Internet-facing

- Listeners: HTTP (80) / HTTPS (443)

- AZs: At least 2

- Target Group: Type - Instances, Health Check - /

- Review & Create

B. Using AWS CLI

aws elbv2 create-load-balancer \

--name my-alb-demo \

--subnets subnet-123456 subnet-789012 \

--security-groups sg-123456 \

--scheme internet-facing \

--type application \

--ip-address-type ipv4

🌍 8. Example: Path-Based Routing

| URL | Target Group | Backend Service |

|---|---|---|

| myapp.com/ | TG-Frontend | Web Frontend |

| myapp.com/api/* | TG-API | REST API |

| myapp.com/images/* | TG-Images | Image Service |
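
The routing table above can be mimicked in a few lines. This is a toy model of first-match rule evaluation, assuming the three target groups from the table and using Python's fnmatch wildcards as a stand-in for ALB path patterns; the real service evaluates rules by priority on the load balancer itself.

```python
from fnmatch import fnmatchcase

# (path pattern, target group) pairs, evaluated in priority order.
RULES = [
    ("/api/*", "TG-API"),
    ("/images/*", "TG-Images"),
]
DEFAULT_TARGET_GROUP = "TG-Frontend"  # default action when no rule matches


def route(path: str) -> str:
    """Return the target group for a request path (first matching rule wins)."""
    for pattern, target_group in RULES:
        if fnmatchcase(path, pattern):
            return target_group
    return DEFAULT_TARGET_GROUP


for path in ["/", "/api/users", "/images/logo.png", "/about"]:
    print(f"{path:<20} -> {route(path)}")
```

Requests that match no pattern fall through to the frontend target group, which plays the role of the listener's default action.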

📊 9. Monitoring and Logging

| Feature | Purpose |

|---|---|

| CloudWatch Metrics | Monitor request count, latency, and target health |

| Access Logs (S3) | Store detailed request/response data |

| AWS X-Ray | Trace requests end-to-end |

| Health Checks | Identify and isolate failed instances |

🔒 10. Security and Compliance

- Use HTTPS listeners with SSL certificates (ACM)

- Integrate with AWS WAF to block attacks

- Restrict traffic via Security Groups / Network ACLs

- Enforce TLS 1.2 or higher

💼 11. Real-World Use Cases

| Use Case | Example |

|---|---|

| Web Applications | Distribute web server traffic |

| Microservices | Path-based routing to multiple backends |

| ECS Containers | Dynamic service discovery |

| API Gateway Alternative | Host REST APIs behind ALB |

| Hybrid Apps | Integrate EC2 + Lambda |

⚖️ 12. Advantages & Limitations

| Advantages | Limitations |

|---|---|

| Layer 7 intelligent routing | Higher cost than CLB |

| SSL offloading | No TCP/UDP direct support |

| Native container support | No static IP (use NLB for that) |

| Auto-scaling & fault tolerance | Complex for small apps |

🧠 13. ALB vs NLB vs CLB Comparison

| Feature | ALB | NLB | CLB |

|---|---|---|---|

| Layer | 7 | 4 | 4/7 |

| Protocol | HTTP/HTTPS | TCP/UDP/TLS | HTTP/HTTPS/TCP |

| SSL Termination | ✅ | ✅ (TLS listeners) | ✅ |

| Host/Path Routing | ✅ | ❌ | ❌ |

| WebSocket Support | ✅ | ✅ | ❌ |

| Health Checks | HTTP/HTTPS | TCP | HTTP/HTTPS |

| Use Case | Web apps, APIs | Low-latency apps | Legacy setups |

- ALB operates at Layer 7 (Application Layer).

- Supports path, host, and header-based routing.

- Integrates with ECS, Lambda, WAF, ACM.

- Offers SSL termination, auto-scaling, and health checks.

- Ideal for modern, microservice-based web applications.

3.2. What is a Network Load Balancer (NLB)?

A Network Load Balancer (NLB) operates at Layer 4 (Transport Layer) and efficiently distributes incoming TCP, UDP, or TLS traffic across multiple targets (EC2, IPs, Containers, or On-prem servers). It is built for high performance, ultra-low latency, and massive scalability, and can handle millions of requests per second.

🌩️ Why Use a Network Load Balancer?

- ⚡ High-performance – Handles sudden traffic spikes with ease.

- 🧩 Low-latency – Works at the connection (network) level.

- 🧱 Highly available – Spreads load across multiple Availability Zones.

- 🔐 Secure – Supports static IPs and TLS offloading.

- 🔁 Reliable – Automatically reroutes traffic to healthy targets.

✅ Best For: Real-time applications like gaming, IoT, VoIP, and financial trading systems.

🧱 Types of AWS Load Balancers

| Type | Layer | Protocol | Use Case |

|---|---|---|---|

| Application Load Balancer (ALB) | Layer 7 | HTTP/HTTPS | Web apps, APIs |

| Network Load Balancer (NLB) | Layer 4 | TCP/UDP/TLS | Real-time, low latency apps |

| Classic Load Balancer (CLB) | Layer 4 & 7 | HTTP/TCP | Legacy workloads |

🌐 NLB Architecture Overview

Internet

↓

┌──────────────┐

│ NLB (Static IP) │ ← Distributes TCP/UDP traffic

└──────────────┘

↓

┌────────────┴────────────┐

│ │

EC2-1 (Target) EC2-2 (Target)

- 1️⃣ Clients connect to NLB via DNS name or static IP.

- 2️⃣ NLB receives TCP/UDP/TLS traffic.

- 3️⃣ NLB forwards to healthy targets in target groups.

- 4️⃣ Targets respond directly back to clients.

🧩 Key Components of NLB

| Component | Description |

|---|---|

| Load Balancer | Main entry point for all incoming traffic. |

| Listener | Defines protocol & port (e.g., TCP:80, TLS:443, UDP:53). |

| Target Group | Collection of EC2s, IPs, or ECS containers. |

| Health Check | Monitors targets’ availability regularly. |

| Elastic IPs (EIPs) | Assigns static public IPs for consistent access. |

🎯 Listener and Target Groups

Listeners: Accept incoming traffic and forward to target groups.

Target Groups: Contain EC2 instances or IPs where traffic is sent.

| Listener | Target Group | Description |

|---|---|---|

| TCP:80 | TG-Web | Handles web traffic |

| UDP:53 | TG-DNS | DNS or gaming traffic |

| TLS:443 | TG-SecureApp | Encrypted HTTPS traffic |

❤️ Health Checks

NLB regularly checks target health before sending traffic.

Only healthy targets receive traffic.

🧠 NLB Features

- ⚙️ Layer 4 Load Balancing – routes traffic based on IP & port.

- 📡 Static IP Support – assign Elastic IPs per AZ.

- 🔐 TLS Termination – offloads encryption via ACM certificates.

- 🌍 Cross-Zone Balancing – evenly distributes across AZs.

- 👁️ Preserve Source IP – see real client IPs in logs.

- 🔗 Integrates with EC2, ECS, Global Accelerator, and CloudWatch.

- 🚀 Handles millions of requests per second.

🧰 Steps to Create an NLB (Console)

- Open EC2 Dashboard → Load Balancers → Create Load Balancer

- Select Network Load Balancer

- Set name, scheme (Internet-facing/Internal), and IP type (IPv4)

- Add listeners (TCP:80, TLS:443)

- Choose Availability Zones & assign Elastic IPs

- Create Target Group → Type: Instances/IP → Health check: TCP/HTTP

- Register targets (EC2s)

- Review & Create

💻 AWS CLI Example

aws elbv2 create-load-balancer \

--name my-nlb-demo \

--type network \

--subnets subnet-123456 subnet-789012 \

--scheme internet-facing \

--ip-address-type ipv4

📊 Real-World Use Cases

| Use Case | Protocol | Target | Description |

|---|---|---|---|

| Web Server Load Balancing | TCP:80 | EC2 | Distribute web requests |

| Database Cluster | TCP:3306 | RDS/MySQL | Balance DB replicas |

| Gaming/DNS Server | UDP:53 | EC2 | Handle real-time traffic |

| Secure App | TLS:443 | EC2 | Encrypted connections |

🔐 Security & Monitoring

- Use Security Groups for targets

- Enable TLS (port 443) for encryption

- Restrict inbound ports

- Integrate with CloudWatch and IAM (AWS WAF attaches to an ALB, not to an NLB)

⚖️ ALB vs NLB vs CLB

| Feature | ALB | NLB | CLB |

|---|---|---|---|

| Layer | 7 (Application) | 4 (Transport) | 4 & 7 |

| Protocol | HTTP/HTTPS | TCP/UDP/TLS | HTTP/HTTPS/TCP |

| Routing | URL/Host/Header | Port/IP-based | Basic |

| Performance | Moderate | Very High | Low |

| Static IP | ❌ | ✅ | ❌ |

| SSL Termination | ✅ | ✅ | ✅ |

| WebSocket Support | ✅ | ✅ | ❌ |

| Health Check | HTTP/HTTPS | TCP/HTTP | HTTP/HTTPS |

🧠 Summary

- NLB operates at Layer 4 for TCP, UDP, and TLS traffic.

- Supports static IPs and preserves source IPs.

- Provides ultra-high performance and low latency.

- Best suited for real-time, gaming, IoT, and financial systems.

2.6 AWS Auto Scaling Group (ASG)

1️⃣ What is an Auto Scaling Group (ASG)?

An Auto Scaling Group (ASG) is an AWS service that automatically manages the number of EC2 instances in your environment based on demand.

- Ensures the desired number of instances are always running.

- Automatically scales out when load increases and scales in when load decreases.

- Replaces unhealthy instances automatically.

💡 Think of ASG as your application’s self-healing and auto-growing system.

2️⃣ Why Use Auto Scaling Groups?

| Reason | Description |

|---|---|

| High Availability | Keeps your app running even if instances fail. |

| Scalability | Automatically adjusts capacity based on demand. |

| Fault Tolerance | Launches new instances in healthy AZs. |

| Cost Optimization | Removes unused instances when traffic is low. |

| Automation | No manual management required. |

3️⃣ Core Components of Auto Scaling

| Component | Description |

|---|---|

| Launch Template / Config | Defines instance settings (AMI, type, key, etc.). |

| Auto Scaling Group | Defines number and location of instances. |

| Scaling Policies | Decide when to scale in or out. |

| CloudWatch Alarms | Trigger scaling actions based on metrics. |

| Load Balancer | Distributes traffic across instances. |

4️⃣ How Auto Scaling Works (Overview)

+--------------------------------------+

| CloudWatch Alarm (Trigger) |

+--------------------------------------+

|

v

+-----------------------------------+

| Scaling Policy (Condition) |

+-----------------------------------+

|

v

+-----------------------------------+

| Auto Scaling Group (ASG) |

| - Desired Capacity |

| - Min / Max Size |

| - Launch Template |

+-----------------------------------+

|

v

+-----------------------------------+

| EC2 Instances (Running) |

+-----------------------------------+

Example: If CPU > 80% for 5 minutes → ASG adds 2 EC2s.

If CPU < 20% for 10 minutes → ASG removes 1 instance.
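
The example rules can be sketched as a small function. This is a simplified illustration that ignores the "for 5 minutes" and "for 10 minutes" duration conditions and assumes a Min size of 1 and a Max size of 6; next_desired_capacity is a hypothetical name.

```python
def next_desired_capacity(current: int, avg_cpu: float, min_size: int = 1, max_size: int = 6) -> int:
    """Apply the example rules, then clamp the result to the group's Min/Max size."""
    if avg_cpu > 80:
        desired = current + 2   # scale out by two instances
    elif avg_cpu < 20:
        desired = current - 1   # scale in by one instance
    else:
        desired = current       # within the normal band: no change
    return max(min_size, min(max_size, desired))


print(next_desired_capacity(2, 85))  # 4
print(next_desired_capacity(5, 92))  # 6 (capped at Max size)
print(next_desired_capacity(1, 10))  # 1 (never below Min size)
```

The clamp at the end is the important part: whatever a policy asks for, the group never leaves its Min/Max range.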

5️⃣ Launch Template (Heart of ASG)

- AMI ID, Instance Type, Key Pair

- Security Groups, IAM Role, EBS Size, User Data

aws ec2 create-launch-template \

--launch-template-name my-launch-template \

--version-description "v1" \

--launch-template-data '{

"ImageId":"ami-0abcdef1234567890",

"InstanceType":"t2.micro",

"KeyName":"my-key",

"SecurityGroupIds":["sg-0abc1234"],

"UserData":"IyEvYmluL2Jhc2gKc3VkbyB5dW0gaW5zdGFsbCBodHRwZCAteQ=="

}'
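
The UserData value in a launch template is a Base64-encoded script. The sketch below decodes the string used in the command above and shows how to encode your own script; the helper names are illustrative.

```python
import base64

# The UserData value from the launch template example.
encoded = "IyEvYmluL2Jhc2gKc3VkbyB5dW0gaW5zdGFsbCBodHRwZCAteQ=="


def decode_user_data(value: str) -> str:
    """Turn a Base64 UserData string back into the original script."""
    return base64.b64decode(value).decode("utf-8")


def encode_user_data(script: str) -> str:
    """Encode a shell script for the UserData field of a launch template."""
    return base64.b64encode(script.encode("utf-8")).decode("ascii")


print(decode_user_data(encoded))
```

Decoding reveals a two-line script: the #!/bin/bash line followed by sudo yum install httpd -y.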

6️⃣ Key Settings in ASG

| Setting | Description |

|---|---|

| Launch Template | Defines EC2 config. |

| VPC & Subnets | Specifies network placement. |

| Load Balancer | Optional, for traffic distribution. |

| Desired / Min / Max Size | Controls scaling limits. |

| Health Checks | EC2 or ELB-based instance health. |

7️⃣ Scaling Policies

| Type | Description | Example |

|---|---|---|

| Target Tracking | Keeps metric near target. | CPU 60% |

| Simple Scaling | Single threshold. | Add 1 if CPU > 80% |

| Step Scaling | Incremental scaling. | Add 1 if >70%, 2 if >90% |

| Scheduled | Time-based. | Add 3 at 9 AM daily |
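
Step scaling from the table ("Add 1 if >70%, 2 if >90%") can be modelled as a lookup over thresholds. This is a minimal sketch of the idea with hypothetical names, not the way policies are defined in AWS itself.

```python
# (lower CPU bound, instances to add), checked from the highest bound down.
STEPS = [(90, 2), (70, 1)]


def step_adjustment(cpu: float) -> int:
    """Return how many instances to add for a given average CPU percentage."""
    for lower_bound, instances_to_add in STEPS:
        if cpu > lower_bound:
            return instances_to_add
    return 0  # below every step: no scaling action


for cpu in (50, 75, 95):
    print(f"CPU {cpu}% -> add {step_adjustment(cpu)}")
```

Compared with simple scaling, the size of the response grows with the size of the breach.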

8️⃣ CloudWatch Integration

- Metrics: CPUUtilization, NetworkIn/Out, RequestCount

- Triggers scaling actions via alarms

aws cloudwatch put-metric-alarm \

--alarm-name "HighCPU" \

--metric-name CPUUtilization \

--namespace AWS/EC2 \

--statistic Average \

--period 300 \

--threshold 70 \

--comparison-operator GreaterThanThreshold \

--evaluation-periods 2
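
The effect of --evaluation-periods can be sketched as follows. This is a simplified model in which the alarm fires only when the most recent N datapoints all breach the threshold; it leaves out options such as missing-data handling, and alarm_state is a hypothetical name.

```python
def alarm_state(datapoints, threshold=70.0, evaluation_periods=2):
    """Return "ALARM" when the most recent N datapoints all exceed the threshold."""
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"  # not enough history to decide yet
    return "ALARM" if all(value > threshold for value in recent) else "OK"


print(alarm_state([50, 75, 82]))  # ALARM: the last two periods are above 70
print(alarm_state([82, 75, 60]))  # OK: the latest period dropped below 70
print(alarm_state([90]))          # INSUFFICIENT_DATA
```

Requiring two consecutive breaches keeps a single short CPU spike from triggering a scale-out.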

9️⃣ Instance Life Cycle

| State | Description |

|---|---|

| Pending | Launching |

| InService | Running |

| Terminating | Scaling in |

| Terminated | Removed |

| Standby | Paused but running |

🔟 Health Checks

- EC2 health – instance system checks

- ELB health – traffic response

- Custom health – user scripts/metrics

💡 Self-healing infrastructure: unhealthy instances auto-replaced.

💻 Step-by-Step: Creating ASG (Console)

- Create Launch Template (define AMI, type, SG, User Data)

- Create Auto Scaling Group (set min, max, desired, attach ALB)

- Test Scaling (stress test CPU to trigger scale out)

- Verify & Cleanup (delete ASG and template)

12️⃣ ASG + ALB Integration

Instances auto-register to Target Group and receive balanced traffic.

13️⃣ Monitoring & Logging

| Tool | Purpose |

|---|---|

| CloudWatch | Monitor instance and scaling metrics |

| Activity History | Records scaling events |

| CloudTrail | Tracks API calls |

| SNS | Send notifications |

14️⃣ Advanced Features

- Instance Refresh – auto-upgrade AMI

- Warm Pools – standby instances

- Lifecycle Hooks – custom actions during launch/terminate

- Mixed Instances Policy – combine Spot + On-Demand

- Predictive Scaling – uses ML for pre-scaling

15️⃣ Real-World Use Cases

| Use Case | Example |

|---|---|

| Web Servers | Scale websites with traffic |

| E-commerce | Handle sales surges |

| CI/CD Deployments | Replace old instances |

| Security Labs | Multiple load servers |

| Microservices | Scale each service independently |

16️⃣ Best Practices

- ✅ Use multiple AZs for fault tolerance

- ✅ Attach ALB for load balancing

- ✅ Use Target Tracking for simplicity

- ✅ Enable termination protection

- ✅ Prefer Launch Templates

- ✅ Define grace periods correctly

- ✅ Use least-privilege IAM roles

17️⃣ Common CLI Commands

# Create Launch Template

aws ec2 create-launch-template --launch-template-name my-template --version-description v1 --launch-template-data file://template.json

# Create ASG

aws autoscaling create-auto-scaling-group \

--auto-scaling-group-name my-asg \

--launch-template LaunchTemplateName=my-template,Version=1 \

--min-size 1 --max-size 4 --desired-capacity 2 \

--vpc-zone-identifier "subnet-abc,subnet-def"

18️⃣ Troubleshooting

| Issue | Cause | Fix |

|---|---|---|

| Instances not launching | Invalid AMI/key pair | Check template |

| No scaling | Policy not triggered | Review CloudWatch |

| Instances unhealthy | Wrong health check | Update path |

| Too frequent scaling | Short cooldown | Increase cooldown |

19️⃣ Summary

✅ ASG automates EC2 scaling and healing.

✅ Works with Launch Templates, CloudWatch, ALB.

✅ Ensures cost-optimized, resilient infrastructure.

✅ Core for production-grade AWS deployments.

2.7 Amazon VPC Concepts (Subnets, Route Tables, Gateways)

Amazon VPC (Virtual Private Cloud) is your own private network inside AWS. It allows you to control networking just like on-premises, but with cloud flexibility.

🟦 1. What is a VPC?

A VPC is an isolated virtual network you create inside AWS. You decide:

- How many subnets you want

- Which resources are public or private

- How traffic flows using route tables

- How to connect to the internet or on-premises

Your AWS Account

└── VPC (Your Private Network)

├── Subnets

├── Route Tables

├── Gateways

├── Security Groups

└── NACLs

🟩 2. Subnets – Dividing Your VPC into Small Areas

A subnet is a smaller section inside your VPC. You divide your VPC into multiple subnets to separate your resources.

🔹 Types of Subnets

- Public Subnet – Accessible from the internet (via Internet Gateway)

- Private Subnet – NOT accessible directly from the internet

🔹 What goes in a Public Subnet?

- Web servers (EC2)

- Load balancers

- Bastion hosts

🔹 What goes in a Private Subnet?

- Databases (RDS)

- Application servers

- Internal backend services

- Cache servers

🌍 Subnet Diagram

VPC (10.0.0.0/16)

|

├── Public Subnet (10.0.1.0/24) → Internet Allowed

└── Private Subnet (10.0.2.0/24) → No Direct Internet

🟥 3. Route Tables – Navigation Map for Your Subnets

A Route Table contains a set of rules that decide where network traffic goes.

🔹 Example Route Table (Public Subnet)

| Destination | Target |

|---|---|

| 10.0.0.0/16 | local |

| 0.0.0.0/0 | Internet Gateway (IGW) |

🔹 Example Route Table (Private Subnet)

| Destination | Target |

|---|---|

| 10.0.0.0/16 | local |

| 0.0.0.0/0 | NAT Gateway |
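
A route table is matched by picking the most specific route that contains the destination address. The sketch below models the two example tables with Python's ipaddress module; the target labels are shorthand for illustration, not real resource IDs.

```python
import ipaddress

PUBLIC_ROUTES = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw"}
PRIVATE_ROUTES = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-gateway"}


def lookup(route_table: dict, destination: str) -> str:
    """Pick the most specific (longest-prefix) route that contains the destination."""
    ip = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if ip in ipaddress.ip_network(cidr)
    ]
    # Both tables contain 0.0.0.0/0, so every IPv4 destination matches something.
    _network, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


print(lookup(PUBLIC_ROUTES, "10.0.2.15"))  # local
print(lookup(PUBLIC_ROUTES, "8.8.8.8"))    # igw
print(lookup(PRIVATE_ROUTES, "8.8.8.8"))   # nat-gateway
```

Traffic between subnets inside the VPC always takes the local route, because /16 is more specific than the /0 default route.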

🗺️ Route Table Diagram

Public Subnet

↓

Internet Gateway → Internet

Private Subnet

↓

NAT Gateway → Internet (OUTBOUND ONLY)

🟨 4. Gateways – Entry & Exit Points

Gateways allow your VPC to communicate with the outside world or your on-prem network.

🟦 4.1 Internet Gateway (IGW)

Allows your VPC to connect to the internet. Required for:

- EC2 public IP access

- Hosting websites

- Inbound internet traffic

🟩 4.2 NAT Gateway

Allows instances in a **private subnet** to access the internet **only for outbound traffic** (e.g., downloading updates).

🟥 4.3 VPC Peering

Connects two VPCs so they can communicate privately using private IP addresses.

🟧 4.4 VPN Gateway / Direct Connect

Connects your AWS VPC to your On-Premises Data Center securely.

- VPN Gateway → Encrypted connection over the internet

- Direct Connect → Private dedicated high-speed connection

🌐 5. Full VPC Diagram (Very Easy)

+---------------------+

| VPC |

| 10.0.0.0/16 |

+---------------------+

/ \

/ \

+------------------+ +------------------+

| Public Subnet | | Private Subnet |

| 10.0.1.0/24 | | 10.0.2.0/24 |

+------------------+ +------------------+

| |

| |

+-----------------+ +---------------------+

| EC2 Public | | EC2 Private |

+-----------------+ +---------------------+

| |

| +---------------+

+-----------------+ | NAT Gateway |

| Internet Gateway| +---------------+

+-----------------+ |

| |

Internet Internet (Only Outbound)

- VPC = Your private network in AWS

- Subnets = Divide your network (public/private)

- Route Tables = Decide traffic direction

- IGW = Allows internet access for public subnets

- NAT Gateway = Allows private subnets to reach the internet (outbound only)

- VPN/DC = Connect AWS to on-premises

2.7a CIDR Blocks & IP Addressing (IPv4/IPv6)

CIDR (Classless Inter-Domain Routing) defines how many IP addresses you have inside your VPC or Subnets. Understanding CIDR is crucial for planning AWS networks effectively.

The suffix (for example “/16”) defines how many total IPs you get: the smaller the number, the larger the block.

🟦 1. Understanding IPv4 CIDR Notation

IPv4 addresses are 32-bit numbers written as four octets (x.x.x.x). The CIDR suffix (like /16 or /24) tells us how many bits are fixed for the network.

🔹 Common CIDR Blocks

| CIDR | Total IPs | Usable IPs | Usage |

|---|---|---|---|

| /16 | 65,536 | 65,531 | Entire VPC |

| /20 | 4,096 | 4,091 | Large Subnet |

| /24 | 256 | 251 | Most Common Subnet Size |

| /28 | 16 | 11 | Small Subnet |

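AWS reserves 5 IPs in every subnet:

• First IP → Network Address

• Second IP → AWS VPC Router

• Third IP → Reserved by AWS for DNS

• Fourth IP → Reserved for future use

• Last IP → Broadcast address (broadcast is not supported in a VPC, but the address is still reserved)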

✔ Usable IPs = Total IPs − 5
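The arithmetic behind these numbers can be sketched in a few lines. The function names below are illustrative; the only rules used are the ones stated above (a prefix of n bits leaves 32 − n host bits, and AWS reserves 5 addresses per subnet).

```python
AWS_RESERVED_PER_SUBNET = 5  # addresses AWS keeps for itself in every subnet


def total_ips(prefix):
    """Total addresses in an IPv4 block: 2 to the power of the host bits."""
    return 2 ** (32 - prefix)


def usable_ips(prefix):
    """Addresses you can actually assign inside an AWS subnet."""
    return total_ips(prefix) - AWS_RESERVED_PER_SUBNET


for prefix in (16, 20, 24, 28):
    print(f"/{prefix}: total={total_ips(prefix):,} usable={usable_ips(prefix):,}")
```

For example, a /24 has 2^8 = 256 addresses, of which 251 are usable in AWS.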

🟩 2. CIDR Example: 10.0.1.0/24

This CIDR block is commonly used for public subnets.

| Info | Value |

|---|---|

| Network Range | 10.0.1.0 – 10.0.1.255 |

| Total IPs | 256 |

| Usable IPs | 251 (AWS reserves 5) |

| Subnet Mask | 255.255.255.0 |

🔹 Visual Diagram

10.0.1.0/24 → 256 IPs

Reserved by AWS:

10.0.1.0 → Network

10.0.1.1 → VPC Router

10.0.1.2 → DNS (reserved by AWS)

10.0.1.3 → Reserved for future use

10.0.1.255 → Broadcast (not supported in a VPC, still reserved)

Usable range:

10.0.1.4 → 10.0.1.254
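As a cross-check, the reserved addresses and the usable range for any subnet can be computed with Python's standard `ipaddress` module. This is a sketch based on AWS's documented rule that the first four addresses and the last address of every subnet are reserved; `aws_reserved` and `usable_range` are illustrative helper names.

```python
import ipaddress


def aws_reserved(cidr):
    """Map each AWS-reserved role to its address within the subnet."""
    net = ipaddress.ip_network(cidr)
    base = net.network_address
    return {
        "network": base,
        "vpc_router": base + 1,
        "dns": base + 2,
        "future_use": base + 3,
        "broadcast": net.broadcast_address,
    }


def usable_range(cidr):
    """First and last address you can assign to your own resources."""
    net = ipaddress.ip_network(cidr)
    return net.network_address + 4, net.broadcast_address - 1


for role, address in aws_reserved("10.0.1.0/24").items():
    print(f"{address} -> {role}")

first, last = usable_range("10.0.1.0/24")
print(f"Usable: {first} to {last}")
```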

🟥 3. IPv6 Overview (Optional)

IPv6 is a 128-bit addressing format providing an extremely large number of IPs. AWS VPC IPv6 ranges look like:

Example IPv6 CIDR: 2600:1f18:abcd:1200::/56
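To get a feel for the size of an IPv6 block, the sketch below splits a /56 into /64 pieces, the sizes typically used for an AWS VPC and its subnets. The address is an illustrative value aligned on a /56 boundary, not a real allocation.

```python
import ipaddress

# Illustrative /56 block (assumed value, aligned on a /56 boundary).
vpc_v6 = ipaddress.ip_network("2600:1f18:abcd:1200::/56")

# A /56 leaves 8 bits before /64, so it splits into 2**8 subnets.
subnets_v6 = list(vpc_v6.subnets(new_prefix=64))

print(len(subnets_v6))   # number of /64 subnets in the block
print(subnets_v6[0])     # first /64
print(subnets_v6[-1])    # last /64
```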

🟨 4. How to Subnet a VPC

Example: VPC = 10.0.0.0/16 → We divide it into smaller subnets.

| Subnet Name | CIDR | IPs | Purpose |

|---|---|---|---|

| public-subnet | 10.0.1.0/24 | 251 | Internet-facing resources |

| private-subnet | 10.0.2.0/24 | 251 | DBs, App servers |

🔹 Subnetting Diagram

VPC: 10.0.0.0/16

├── 10.0.1.0/24 → Public Subnet

├── 10.0.2.0/24 → Private Subnet

└── More subnets (10.0.X.0/24)
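Before creating subnets, it can help to check the plan on paper. The following sketch uses the standard `ipaddress` module to list the /24 blocks available inside the VPC and to confirm that the planned subnets fit inside it and do not overlap. `validate_plan` is a hypothetical helper written for this example.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")

# Every possible /24 inside the VPC: 10.0.0.0/24 ... 10.0.255.0/24.
all_24s = list(vpc.subnets(new_prefix=24))
print(len(all_24s))

plan = {"public-subnet": "10.0.1.0/24", "private-subnet": "10.0.2.0/24"}


def validate_plan(vpc, plan):
    """Raise ValueError if a subnet is outside the VPC or overlaps another."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    for name, net in nets.items():
        if not net.subnet_of(vpc):
            raise ValueError(f"{name} ({net}) is outside {vpc}")
    names = list(nets)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if nets[a].overlaps(nets[b]):
                raise ValueError(f"{a} overlaps {b}")
    return nets


for name, net in validate_plan(vpc, plan).items():
    print(f"{name}: {net}")
```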

🧮 5. CIDR Calculator – Subnet Sizes & IP Count

This table helps you quickly understand how many IP addresses are available in each subnet size (CIDR prefix). Very useful for VPC and Subnet design.

| CIDR Prefix | Total IPs | Usable IPs (Total - 5) | Subnet Mask | Typical Usage |

|---|---|---|---|---|

| /16 | 65,536 | 65,531 | 255.255.0.0 | Entire VPC |

| /17 | 32,768 | 32,763 | 255.255.128.0 | Large Subnets |

| /18 | 16,384 | 16,379 | 255.255.192.0 | Large Private Subnets |

| /19 | 8,192 | 8,187 | 255.255.224.0 | Medium Subnets |

| /20 | 4,096 | 4,091 | 255.255.240.0 | App Subnets |

| /21 | 2,048 | 2,043 | 255.255.248.0 | DB Subnets |

| /22 | 1,024 | 1,019 | 255.255.252.0 | Batch Systems |

| /23 | 512 | 507 | 255.255.254.0 | Medium Networks |

| /24 | 256 | 251 | 255.255.255.0 | Most Common Subnet |

| /25 | 128 | 123 | 255.255.255.128 | Small Subnet |

| /26 | 64 | 59 | 255.255.255.192 | Testing / Lab |

| /27 | 32 | 27 | 255.255.255.224 | Containers, ENIs |

| /28 | 16 | 11 | 255.255.255.240 | Small Private Subnets |

| /29 | 8 | - | 255.255.255.248 | Point-to-Point Links (not allowed in AWS; smallest subnet is /28) |

| /30 | 4 | - | 255.255.255.252 | Routing Links (not allowed in AWS; smallest subnet is /28) |
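The subnet mask column can be derived from the prefix as well: the mask is simply 32 bits with the first "prefix" bits set to 1. Below is a small sketch that rebuilds the rows for the sizes AWS accepts for subnets (/16 to /28); `netmask_for` and `cidr_row` are illustrative names.

```python
import ipaddress


def netmask_for(prefix):
    """Dotted-decimal mask with the first `prefix` bits set to 1."""
    mask_int = (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF
    return str(ipaddress.IPv4Address(mask_int))


def cidr_row(prefix):
    """Return (prefix, total IPs, usable IPs in AWS, subnet mask)."""
    total = 2 ** (32 - prefix)
    return prefix, total, total - 5, netmask_for(prefix)


for prefix in range(16, 29):
    p, total, usable, mask = cidr_row(prefix)
    print(f"/{p:<3} {total:>6,} {usable:>6,}  {mask}")
```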

- CIDR controls how many IPs are available in VPC/Subnets

- /16 = Big network, /24 = Common subnet

- AWS reserves 5 IPs in every subnet

- IPv4 is preferred for most VPC setups

- IPv6 is optional and not needed for beginners

2.7b Create VPC with EC2 (Full Step-by-Step Guide)

In this section, you will learn how to manually build an AWS VPC from scratch, configure subnets, route tables, and internet connectivity, and finally launch an EC2 instance inside the Public Subnet. This guide is 100% practical and beginner-friendly.

🟧 VPC Only – Creating a Custom VPC Manually

AWS provides two creation modes:

✔ VPC Only – Creates only the VPC (you configure all components manually)

✔ VPC and More – Automatically creates subnets, IGW, NAT, routes, etc.

Here we use VPC Only for full control and better understanding. You then create the remaining components yourself:

- Public & Private Subnets

- Internet Gateway (IGW)

- Route Tables

- NAT Gateway (Optional)

- Security Groups & NACLs

🔹 Step 1: Create VPC (VPC Only)

- Go to VPC Console → Click Create VPC

- Select → VPC Only

| Field | Value | Description |

|---|---|---|

| Name | project-vpc | Easy reference name |

| IPv4 CIDR block | 10.0.0.0/16 | Large block (65,536 IPs) |

| IPv6 | No IPv6 | Beginner friendly |

| Tenancy | Default | Free tier supported |
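The same settings can also be applied from a script. The sketch below is not part of the console walkthrough: it assumes the boto3 library (the AWS SDK for Python) and takes an EC2 client as a parameter. `create_project_vpc` is a hypothetical helper name; the region in the usage comment is inferred from the ap-south-1a zone used later in this guide.

```python
def create_project_vpc(ec2, name="project-vpc", cidr="10.0.0.0/16"):
    """Create a VPC with default tenancy and a Name tag; return its ID."""
    response = ec2.create_vpc(
        CidrBlock=cidr,
        InstanceTenancy="default",
        TagSpecifications=[
            {"ResourceType": "vpc", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    )
    return response["Vpc"]["VpcId"]


# Usage (assumes boto3 is installed and AWS credentials are configured):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="ap-south-1")
#   vpc_id = create_project_vpc(ec2)
```

Passing the client in keeps the function easy to try against a test double before running it on a real account.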

🟦 Step 2: Create Subnets (Public & Optional Private)

A VPC needs at least one subnet before you can launch resources in it. We create one public subnet and, optionally, one private subnet.

🔹 Public Subnet

- Select VPC ID → project-vpc

- Name: public-subnet

- IPv4 CIDR: 10.0.1.0/24

- AZ: ap-south-1a

✔ You selected the correct VPC → project-vpc

✔ You entered subnet CIDR → 10.0.1.0/24

✔ Availability Zone → ap-south-1a

✔ Name tag added correctly → public-subnet

Enable Auto-Assign Public IP:

- Select the subnet

- Click Edit Subnet Settings

- Enable → Auto-assign IPv4 public address

🔹 Private Subnet (optional)

- Name: private-subnet

- IPv4 CIDR: 10.0.2.0/24
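For reference, here is a scripted sketch of the same subnet steps, again assuming a boto3 EC2 client passed in as `ec2`. `create_subnet` is a hypothetical wrapper; the `public=True` flag corresponds to enabling "Auto-assign IPv4 public address" in the console.

```python
def create_subnet(ec2, vpc_id, name, cidr, az=None, public=False):
    """Create a tagged subnet; optionally auto-assign public IPv4 addresses."""
    kwargs = {
        "VpcId": vpc_id,
        "CidrBlock": cidr,
        "TagSpecifications": [
            {"ResourceType": "subnet", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }
    if az:
        kwargs["AvailabilityZone"] = az
    subnet_id = ec2.create_subnet(**kwargs)["Subnet"]["SubnetId"]
    if public:
        # Same effect as "Enable auto-assign IPv4 public address".
        ec2.modify_subnet_attribute(
            SubnetId=subnet_id, MapPublicIpOnLaunch={"Value": True}
        )
    return subnet_id


# Usage (vpc_id comes from the VPC you created in Step 1):
#   public_id = create_subnet(ec2, vpc_id, "public-subnet", "10.0.1.0/24",
#                             az="ap-south-1a", public=True)
#   private_id = create_subnet(ec2, vpc_id, "private-subnet", "10.0.2.0/24")
```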

🟥 Step 3: Create & Attach Internet Gateway (IGW)

- Go to Internet Gateways

- Click Create Internet Gateway

- Name → project-igw

- Click → Create internet gateway

- ✅ Internet Gateway created successfully!

- Select the Internet Gateway → project-igw

- Click Actions → Attach to VPC

- From dropdown → Select project-vpc

- Click → Attach Internet Gateway
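The create-then-attach sequence above maps to two API calls. A minimal sketch, assuming a boto3 EC2 client passed in as `ec2` (`create_and_attach_igw` is a hypothetical helper name):

```python
def create_and_attach_igw(ec2, vpc_id, name="project-igw"):
    """Create an internet gateway, attach it to the VPC, and return its ID."""
    response = ec2.create_internet_gateway(
        TagSpecifications=[
            {
                "ResourceType": "internet-gateway",
                "Tags": [{"Key": "Name", "Value": name}],
            }
        ]
    )
    igw_id = response["InternetGateway"]["InternetGatewayId"]
    # A new gateway starts detached; it does nothing until attached to a VPC.
    ec2.attach_internet_gateway(InternetGatewayId=igw_id, VpcId=vpc_id)
    return igw_id
```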

🟨 Step 4: Create Public Route Table

🔹 Create Route Table

- Go to Route Tables in the VPC Dashboard

- Click Create Route Table

- Enter Name → public-rt

- Select VPC → project-vpc

- Click → Create route table

✅ Route table (public-rt) was created successfully!

🔹 Add Route to Internet (0.0.0.0/0)

- Select the route table → public-rt

- Click Edit routes

- You will see the default route:

10.0.0.0/16 → local (auto-created)

- Click Add route

- Destination → 0.0.0.0/0

- Target → Internet Gateway

- Select your IGW → igw-01246013decfc63a2 (project-igw)

- Click Save changes

| Destination | Target |

|---|---|

| 10.0.0.0/16 | local |

| 0.0.0.0/0 | Internet Gateway (project-igw) |

✔ Route table public-rt was created successfully

✔ Default route exists: 10.0.0.0/16 → local (Active)

✔ You added: 0.0.0.0/0 → Internet Gateway (igw-01246013decfc63a2)

✔ Status shows Active

👍 Your public route table is properly configured!

🔹 Associate Public Subnet

- Select the route table → public-rt

- Open → Subnet Associations

- Click Edit

- Select your subnet → public-subnet

- Click Save associations

This means EC2 instances in this subnet that have a public IP can reach the internet and, where the security group allows it, be reached from it.
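Step 4 as a whole (create the route table, add the 0.0.0.0/0 route, associate the subnet) can be sketched as one function. It assumes a boto3 EC2 client passed in as `ec2` and the IDs from the earlier steps; `create_public_route_table` is a hypothetical helper name.

```python
def create_public_route_table(ec2, vpc_id, igw_id, subnet_id, name="public-rt"):
    """Create a route table, send 0.0.0.0/0 to the IGW, attach the subnet."""
    response = ec2.create_route_table(
        VpcId=vpc_id,
        TagSpecifications=[
            {
                "ResourceType": "route-table",
                "Tags": [{"Key": "Name", "Value": name}],
            }
        ],
    )
    rt_id = response["RouteTable"]["RouteTableId"]
    # The local route (10.0.0.0/16 -> local) is created automatically.
    ec2.create_route(
        RouteTableId=rt_id,
        DestinationCidrBlock="0.0.0.0/0",
        GatewayId=igw_id,
    )
    # The association is what actually makes the subnet "public".
    ec2.associate_route_table(RouteTableId=rt_id, SubnetId=subnet_id)
    return rt_id
```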

🟦 Step 5: Launch EC2 Instance in Public Subnet

- Open EC2 Console → Click Launch Instance

- Name → project-ec2-public

- Select AMI → Amazon Linux 2 / Ubuntu

- Instance Type → t2.micro

- Select/Create Key Pair

Network Settings

- VPC → project-vpc

- Subnet → public-subnet

- Auto-assign Public IP → Enabled

- Security Group:

- Allow SSH (22) from MY IP

- Allow HTTP (80)
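The launch settings above can be expressed in code as well. This sketch assumes a boto3 EC2 client passed in as `ec2`. The group name `project-sg`, the helper names, and opening HTTP to 0.0.0.0/0 are assumptions made for the example; the AMI ID and key pair name must come from your own account.

```python
def ssh_http_rules(my_ip):
    """Inbound rules: SSH only from one address, HTTP from anywhere (assumed)."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": 22,
            "ToPort": 22,
            "IpRanges": [{"CidrIp": f"{my_ip}/32"}],
        },
        {
            "IpProtocol": "tcp",
            "FromPort": 80,
            "ToPort": 80,
            "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
        },
    ]


def launch_public_instance(ec2, vpc_id, subnet_id, ami_id, key_name, my_ip):
    """Create the security group and launch one t2.micro with a public IP."""
    sg_id = ec2.create_security_group(
        GroupName="project-sg",  # hypothetical name
        Description="SSH from my IP, HTTP from anywhere",
        VpcId=vpc_id,
    )["GroupId"]
    ec2.authorize_security_group_ingress(
        GroupId=sg_id, IpPermissions=ssh_http_rules(my_ip)
    )
    response = ec2.run_instances(
        ImageId=ami_id,
        InstanceType="t2.micro",
        KeyName=key_name,
        MinCount=1,
        MaxCount=1,
        NetworkInterfaces=[
            {
                "DeviceIndex": 0,
                "SubnetId": subnet_id,
                "AssociatePublicIpAddress": True,
                "Groups": [sg_id],
            }
        ],
        TagSpecifications=[
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Name", "Value": "project-ec2-public"}],
            }
        ],
    )
    return response["Instances"][0]["InstanceId"]
```

Restricting port 22 to a single /32 address mirrors the "SSH from MY IP" choice in the console.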

✔ Test SSH Connection

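ssh -i mykey.pem ec2-user@YOUR_PUBLIC_IP

(ec2-user is the default user on Amazon Linux. On an Ubuntu AMI, use ubuntu@YOUR_PUBLIC_IP instead.)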

🧠 Final Architecture Diagram

VPC (10.0.0.0/16)

|

├── Public Subnet (10.0.1.0/24)

│ ├── EC2 Instance (Public IP)

│ └── Route → Internet Gateway

|

└── Private Subnet (10.0.2.0/24)

└── Internal Backend / DB (Optional)

2.8 AWS Direct Connect & VPN (Easy & Detailed Explanation)

When companies move to AWS, they often need a secure and reliable way to connect their on-premises network (office/datacenter) to their AWS VPC (cloud network). AWS provides two main options: AWS VPN and AWS Direct Connect.

🟦 1. AWS Site-to-Site VPN

A Site-to-Site VPN creates an encrypted connection between your on-premises router and your AWS VPC over the public internet.

🔹 How AWS VPN Works

- Your office router connects to AWS

- AWS provides a Virtual Private Gateway (VGW)

- Both sides create an IPSec encrypted tunnel

- Traffic flows securely between office and AWS
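The steps above can be sketched in code, assuming a boto3 EC2 client passed in as `ec2`. In the AWS API your office router is registered as a "customer gateway"; that term, the `create_site_to_site_vpn` helper, the private ASN 65000, and the static-routing option are assumptions made for this example rather than details from the text.

```python
def create_site_to_site_vpn(ec2, vpc_id, office_public_ip, bgp_asn=65000):
    """Register the office router, create and attach a VGW, open the VPN."""
    # 1. Tell AWS about the office router (the "customer gateway").
    cgw_id = ec2.create_customer_gateway(
        BgpAsn=bgp_asn, PublicIp=office_public_ip, Type="ipsec.1"
    )["CustomerGateway"]["CustomerGatewayId"]

    # 2. Create the Virtual Private Gateway and attach it to the VPC.
    vgw_id = ec2.create_vpn_gateway(Type="ipsec.1")["VpnGateway"]["VpnGatewayId"]
    ec2.attach_vpn_gateway(VpcId=vpc_id, VpnGatewayId=vgw_id)

    # 3. Create the IPsec VPN connection between the two gateways.
    vpn = ec2.create_vpn_connection(
        CustomerGatewayId=cgw_id,
        VpnGatewayId=vgw_id,
        Type="ipsec.1",
        Options={"StaticRoutesOnly": True},
    )
    return vpn["VpnConnection"]["VpnConnectionId"]
```

In practice the office router still has to be configured with the tunnel details AWS generates, and the gateway attachment takes a short while to become available, so treat this as an outline of the order of operations.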

🔹 VPN Diagram (Simple)

Office Network (Router/Firewall)

|

Encrypted IPSec Tunnel

|

+------------------------+

| AWS Virtual Private |

| Gateway (VGW) |

+------------------------+

|

VPC

🔹 VPN Advantages

- 🤑 Very low cost

- ⚡ Quick setup (10–15 minutes)